Caching is one of the techniques that significantly improves the performance of web applications. Its purpose is to serve frequently used data to the user quickly, without having to query the database each time. The data is fetched once, stored temporarily in the RAM of the server hosting the application, and reused from there. In short, we cache stable, frequently used data the first time it is obtained and then serve this cached data on subsequent requests. Keep in mind, though, that the data we cache is not the original data. It is a copy of it.

Sample image showing how server-side caching works – Source: [1]

So what happens if we don't use this structure?

Without caching, the application makes a request to the server every time it needs data. In systems with a large user base and a high volume of requests, this causes serious slowness for users and heavy congestion on the server side.

What happens when there are changes to the original data?

As mentioned above, the data we read from RAM is not the original data. Therefore, when the original data changes, the cached copy becomes outdated. To address this, the cached data should be expired at the intervals defined in the configuration, and the updated data should be requested from the server again.
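The expiry idea above can be sketched as a small time-to-live (TTL) cache. This is a minimal illustration, not a production implementation: the `TTLCache` class, the `source` dictionary, and the 60-second interval are all assumptions made for the example, and a fake clock is injected so that expiry can be shown without actually waiting.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds (illustrative)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be demonstrated without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get(self, key: str, load: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # still fresh: serve the cached copy
        value = load()  # missing or expired: ask the original source again
        self._entries[key] = (now + self.ttl_seconds, value)
        return value


# Hypothetical "original data" and a fake clock for the demonstration.
source = {"price": 100}
fake_time = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: fake_time[0])

first = cache.get("price", lambda: source["price"])  # loaded from the source
source["price"] = 120                                # the original data changes
stale = cache.get("price", lambda: source["price"])  # still the old copy
fake_time[0] = 61.0                                  # the configured period has passed
fresh = cache.get("price", lambda: source["price"])  # entry expired, reloaded
```

Between the change and the expiry the cache still returns the old value; a shorter interval narrows that window at the cost of more requests to the source.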

What are the types of caching?

Caching is generally grouped under two main headings: Local Caching (In-Memory Caching) and Global Caching (Distributed Caching).

Local Caching (In-Memory Caching):

Also called Private Caching. It keeps application-related data in the RAM of the server hosting the application. The amount of data it can hold is limited by the RAM of the application server.

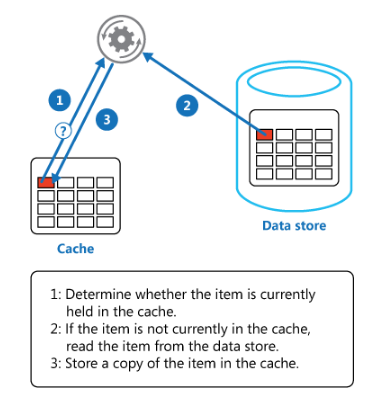

Source: [4]

The image above shows the following steps:

Step 1: Search for data in cache.

Step 2: If data exists in cache, return it.

Step 3: Search for data in the data source if it does not exist.

Step 4: Load data into cache and return it to the user.
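The four steps above can be sketched in a few lines. This is only an illustration: `DATA_SOURCE`, `read_from_source`, and `get_user` are hypothetical names, and a plain dictionary stands in for both the cache and the data source.

```python
from typing import Dict, Optional

# Hypothetical data source standing in for a database table.
DATA_SOURCE: Dict[int, str] = {1: "Alice", 2: "Bob"}

cache: Dict[int, str] = {}
source_reads = 0  # counts how often the data source was actually hit


def read_from_source(user_id: int) -> Optional[str]:
    global source_reads
    source_reads += 1
    return DATA_SOURCE.get(user_id)


def get_user(user_id: int) -> Optional[str]:
    # Step 1: search for the data in the cache.
    if user_id in cache:
        # Step 2: it exists in the cache, so return it.
        return cache[user_id]
    # Step 3: it does not exist in the cache, so search the data source.
    value = read_from_source(user_id)
    # Step 4: load it into the cache and return it to the caller.
    if value is not None:
        cache[user_id] = value
    return value


first = get_user(1)   # cache miss: goes to the data source
second = get_user(1)  # cache hit: served from memory
```

After the two calls, `source_reads` is 1: only the first request reached the data source.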

If our application runs as a single instance, we will not have a problem with this method.

What if we are working with more than one instance?

If our application has more than one instance and the load balancer distributes incoming requests among them according to load, a user making requests to the application may reach a different cache each time. In this case, the user may see different data from one request to the next. Now let's examine this in more detail.

Example of In-Memory Caching across different application instances – Source: [2]

In the example above, applications A and B use a common database. A fills its in-memory cache on a request received at time X, while B fills its in-memory cache on a request received at time Y. If the data is the same at both times, there is no problem. But if the data changed in between, the same request returns different results depending on which application handles it, which is a serious problem.
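The scenario with A and B can be reproduced with a small simulation. The `AppInstance` class and the `stock` value are hypothetical, chosen only to make the inconsistency visible; the shared `database` dictionary stands in for the common database.

```python
class AppInstance:
    """One application instance with its own private in-memory cache (illustrative)."""

    def __init__(self, name: str, database: dict):
        self.name = name
        self.database = database  # shared by all instances
        self.cache = {}           # private to this instance

    def get(self, key: str):
        if key not in self.cache:
            self.cache[key] = self.database[key]  # cache on first request
        return self.cache[key]


database = {"stock": 10}
a = AppInstance("A", database)
b = AppInstance("B", database)

seen_by_a = a.get("stock")  # time X: A caches the value 10
database["stock"] = 7       # the data changes between X and Y
seen_by_b = b.get("stock")  # time Y: B caches the value 7
```

From this point on, the same request returns 10 when it lands on A and 7 when it lands on B, until one of the caches is refreshed.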

Isn't there a solution to this problem?

There is a partial solution to this problem: using the “Sticky Session” feature of the load balancer. With this feature, a user is sent to the same instance that handled their first request on all subsequent requests. The load balancer achieves this by routing based on a cookie or the client's IP address. It is not a definitive solution, because if there is an inconsistency it only covers it up. Therefore it is not recommended.
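As a rough sketch of IP-based stickiness, a load balancer could hash the client address and use the result to pick an instance. The `pick_instance` function, the instance names, and the example address below are assumptions for illustration; real load balancers have their own mechanisms and often rely on a cookie instead.

```python
import hashlib
from typing import List


def pick_instance(client_ip: str, instances: List[str]) -> str:
    """Map a client IP to one instance in a stable, repeatable way."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(instances)
    return instances[index]


instances = ["app-a", "app-b", "app-c"]  # hypothetical instance names

first_route = pick_instance("203.0.113.7", instances)
second_route = pick_instance("203.0.113.7", instances)  # same client, same instance
```

The same address always maps to the same instance as long as the instance list does not change. Each instance still holds its own copy of the data, so the inconsistency is hidden from a single user rather than removed.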

Global Caching (Distributed Caching):

Also called Shared Caching. The data to be cached is kept in a completely separate cache service, not in the RAM of the server where the application runs.

Because the cached data is kept independently of the application's server, it is preserved if that server is interrupted. With In-Memory Caching, by contrast, the entire cache is lost if the server fails.

Shared Caching usage example – Source: [2]

As shown above, all instances use the same cache service. Therefore, the inconsistency we saw with In-Memory Caching does not occur here.
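The same kind of simulation shows why a shared cache avoids the mismatch. Here `SharedCache` is a hypothetical in-process stand-in for a separate cache service; in a real deployment it would be reached over the network, and the class and method names are assumptions made for the example.

```python
class SharedCache:
    """In-process stand-in for a separate cache service (illustrative)."""

    def __init__(self):
        self._store = {}

    def get(self, key: str):
        return self._store.get(key)

    def set(self, key: str, value) -> None:
        self._store[key] = value


class AppInstance:
    """Application instance that reads through the shared cache."""

    def __init__(self, name: str, database: dict, cache: SharedCache):
        self.name = name
        self.database = database
        self.cache = cache  # the same object for every instance

    def get(self, key: str):
        value = self.cache.get(key)
        if value is None:
            value = self.database[key]  # miss: read the database
            self.cache.set(key, value)  # and populate the shared cache
        return value


database = {"stock": 10}
shared = SharedCache()
a = AppInstance("A", database, shared)
b = AppInstance("B", database, shared)

seen_by_a = a.get("stock")  # A populates the shared cache with 10
database["stock"] = 7       # the original data changes
seen_by_b = b.get("stock")  # B reads the same cached copy
```

Both instances now return the same value. It is still the older copy until the entry expires or is refreshed, so expiry remains necessary; what the shared cache removes is the disagreement between instances.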

What are the benefits of caching to our application?

- Reduces unnecessary traffic on the server.

- Provides a better user experience.

- Increases the speed and performance of our application. Since memory is much faster than disk, reading data from the cache is extremely fast. This significantly improves the overall performance of our application.

- Reduces database cost. A single cache instance can provide a large number of IOPS (input/output operations per second), so it can take over work that would otherwise require several database instances, which lowers the total cost.

- Improves availability. A cache can keep serving content to end users even when the content is unavailable from the source servers for a short time.

What are the use case examples of caching?

Common use cases for caching include the following:

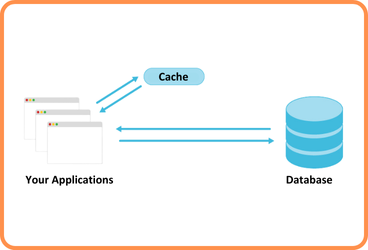

Database Caching:

Database Caching usage example – Source: [5]

Database Caching is a buffering method that temporarily stores frequently queried data in memory. The speed and efficiency of the database is one of the biggest factors in the overall performance of an application. The purpose of the cache is to ease the load on the primary database. A database caching layer can be implemented in front of any database, including relational and NoSQL databases.
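A minimal sketch of such a layer, using Python's built-in `sqlite3` module as the database, might look like this. The `products` table, the `product_name` function, and the `db_queries` counter are hypothetical and exist only to show that repeated reads stop reaching the database.

```python
import sqlite3
from typing import Dict, Optional

# In-memory SQLite database with one illustrative table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO products (id, name) VALUES (1, 'keyboard')")
conn.commit()

query_cache: Dict[int, Optional[str]] = {}  # caching layer in front of the database
db_queries = 0                              # counts queries that reach the database


def product_name(product_id: int) -> Optional[str]:
    global db_queries
    if product_id in query_cache:
        return query_cache[product_id]  # served from memory, no query issued
    db_queries += 1
    row = conn.execute(
        "SELECT name FROM products WHERE id = ?", (product_id,)
    ).fetchone()
    name = row[0] if row else None
    query_cache[product_id] = name
    return name


names = [product_name(1) for _ in range(3)]  # three reads of the same row
```

The three reads produce a single database query; the other two are answered by the cache.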

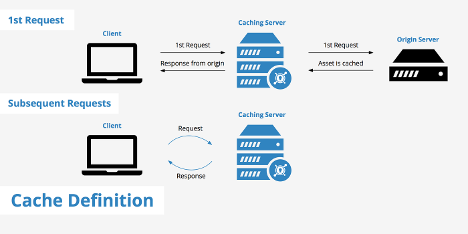

CDN (Content Delivery Network) Caching:

A CDN is a group of geographically distributed servers that speeds up the delivery of web content by bringing it closer to where users are located. To reduce response time, a CDN fetches content such as web pages, images, and videos from the origin server in response to user requests and caches it on its own servers.

DNS (Domain Name System) Caching:

A DNS cache temporarily stores the results of previous DNS lookups in a machine's operating system or web browser. Keeping a local copy of a lookup allows the operating system or browser to resolve it quickly the next time the same lookup is made.

Resources:

[1] - https://www.keycdn.com/support/cache-definition-explanation

[2] - https://docs.microsoft.com/en-us/azure/architecture/best-practices/caching

[3] - https://aws.amazon.com/caching/

[5] - https://www.prisma.io/dataguide/managing-databases/introduction-database-caching
