ChatGPT, developed by OpenAI and equipped with natural language processing technologies, is a language model built on the GPT (Generative Pre-trained Transformer) architecture.

Artificial Intelligence was undoubtedly one of the most important developments of 2023 and 2024.

How ChatGPT generates human-like text is remarkable and hard to understand. If you have spent some time with ChatGPT, you will have noticed that its responses range from plain information to commentary. How does it achieve this? Before looking for an answer to this fundamental question, I should note that the engineering and knowledge behind ChatGPT are among the most striking achievements of engineering, drawing on everything from philosophy to the study of the human brain.

I should warn that this article is long and may be tiring. Although I will take a bird's-eye view of what is happening, I will go into some engineering details, and I will leave the details of some sections for the reader to research. The visualizations in this article were made with the Wolfram Language and Python, using the GPT-2 model. Throughout, I will use OpenAI's ChatGPT as the reference point. Perhaps in the future such a company will no longer exist, due to competition or other reasons. After all, when OpenAI was founded in 2015, it was a "non-profit" organization established to research the future, possible risks, and ethics of Artificial Intelligence. So ChatGPT serves only as an example. My aim is to convey "superficially" a rough outline of what happens behind ChatGPT, and then to explore how and why it works so well. Although some of these things work in practice, there are points we do not understand even in theory.

The first and most fundamental thing to say is that ChatGPT's main job is to produce a "reasonable continuation" of whatever text it is given. By "reasonable," I mean what one might expect someone to write next, given the text so far.

For example, consider the following sentence:

Imagine scanning billions of pages of human-written text, finding every occurrence of this sentence, and seeing which word comes next and how often. ChatGPT effectively does something like this, except that it does not look at literal text. Instead, it looks for things that "match in meaning." The result is a ranked list of words that might come next, each with a probability.
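To make the literal "scan and count" picture concrete, here is a minimal Python sketch that does exactly that on a tiny, made-up corpus. It finds every occurrence of a prompt and counts which word follows it. This is only the naive picture; as noted above, ChatGPT does not look up literal matches. The corpus and the function name `next_word_distribution` are hypothetical.

```python
from collections import Counter

def next_word_distribution(corpus: str, prompt: str) -> dict[str, float]:
    """Find every occurrence of `prompt` in `corpus` and return the
    relative frequency of each word that immediately follows it."""
    words = corpus.lower().split()
    target = prompt.lower().split()
    n = len(target)
    followers = Counter(
        words[i + n]
        for i in range(len(words) - n)  # i + n stays inside the list
        if words[i:i + n] == target
    )
    total = sum(followers.values())
    return {w: c / total for w, c in followers.most_common()}

# A tiny made-up corpus standing in for "billions of pages".
corpus = (
    "the best thing about ai is its ability to learn "
    "the best thing about ai is its ability to adapt "
    "the best thing about ai is its ability to learn quickly"
)
print(next_word_distribution(corpus, "its ability to"))
```

With a real corpus, most long prompts never appear verbatim at all. That is why counting alone runs out of steam, as the later sections show.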

Reducing all random activity in the real world to…

The key point is that when ChatGPT writes text, all it is really doing is repeatedly asking: given the text so far, what should the next word be? It computes the probabilities of the possible next words, adds one word, and then repeats the process.
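The loop described above can be sketched in a few lines of Python. In this hypothetical example, a small hand-written table of next-word probabilities stands in for the model. The table depends only on the previous word, whereas a real model conditions on the whole text so far.

```python
import random

# Hypothetical next-word probabilities, keyed by the previous word only.
NEXT_WORD_PROBS = {
    "the": {"cat": 0.5, "dog": 0.3, "book": 0.2},
    "cat": {"sat": 0.6, "ran": 0.4},
    "dog": {"ran": 0.7, "sat": 0.3},
    "book": {"fell": 1.0},
    "sat": {"down": 1.0},
    "ran": {"away": 1.0},
}

def generate(prompt: list[str], steps: int, rng: random.Random) -> list[str]:
    """Append one word at a time, sampled from the next-word probabilities."""
    text = list(prompt)
    for _ in range(steps):
        probs = NEXT_WORD_PROBS.get(text[-1])
        if not probs:  # no known continuation: stop early
            break
        words = list(probs)
        weights = list(probs.values())
        # Pick the next word at random, in proportion to its probability.
        text.append(rng.choices(words, weights=weights, k=1)[0])
    return text

print(" ".join(generate(["the"], steps=5, rng=random.Random(0))))
```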

So we have a list of probabilities for what the next word might be, but which word should we choose? At first glance, it seems we should take the most probable one, but we shouldn't. If we always choose the highest-probability word, the text is the "most probable" one in a mathematical sense, but it turns out flat. It never shows any creativity and often repeats itself. If we sometimes choose lower-probability words, the text becomes more "creative." And because randomness is involved, we can get different texts even if we use the same prompt several times.

How often lower-probability words are chosen is controlled by a parameter called "temperature." In practice, a temperature of about 0.8 has been found to work well for generating text such as essays. The point I want to emphasize is that there is no theoretical basis for this value, at least none we know of yet; it is simply what works in practice. The lack of a theoretical basis does not make it wrong. (The name "temperature" comes from the exponential distributions familiar from statistical physics, which the sampling formula borrows. As far as we know, there is no deeper physical connection.)
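A common way to implement temperature is to raise each probability to the power 1/T and renormalize: $p_i^{(T)} = p_i^{1/T} / \sum_j p_j^{1/T}$. This is equivalent to dividing log-probabilities by T before a softmax. It is a sketch of the general technique, not necessarily ChatGPT's exact code. T = 1 leaves the distribution unchanged, T < 1 sharpens it toward the top word, and T > 1 flattens it. The probabilities below are made up.

```python
import math
import random

def apply_temperature(probs: dict[str, float], temperature: float) -> dict[str, float]:
    """Rescale a next-word distribution: p_i -> p_i**(1/T), renormalized."""
    if temperature <= 0:
        raise ValueError("use greedy selection (argmax) for temperature 0")
    # Work in log space, like a softmax over logits divided by T.
    logits = {w: math.log(p) / temperature for w, p in probs.items()}
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {w: math.exp(l - m) for w, l in logits.items()}
    z = sum(exps.values())
    return {w: e / z for w, e in exps.items()}

def sample(probs: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one word from the temperature-adjusted distribution."""
    scaled = apply_temperature(probs, temperature)
    return rng.choices(list(scaled), weights=list(scaled.values()), k=1)[0]

probs = {"learn": 0.45, "adapt": 0.30, "understand": 0.15, "predict": 0.10}  # made up
for t in (0.2, 0.8, 1.0, 2.0):
    print(t, {w: round(p, 3) for w, p in apply_temperature(probs, t).items()})
print(sample(probs, 0.8, random.Random(42)))
```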

For now, let's treat the language model I will use (GPT-2) as a black box and ask it for the five words most likely to come next.

Let's make it easier to understand

Now let's look at what gets added to the sentence at each step.

If we keep going at temperature 0, always taking the most probable word, what do we get? The text soon becomes pointless and repetitive.
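Why does temperature 0 get repetitive? With greedy selection, the same context always leads to the same next word. So once a model that looks only at recent words returns to a word it has already used, it can fall into a loop. The toy table below is hypothetical and conditions only on the previous word, which makes the effect easy to see.

```python
# Hypothetical next-word probabilities, conditioned on the previous word only.
NEXT = {
    "the": {"cat": 0.4, "dog": 0.35, "end": 0.25},
    "cat": {"sat": 0.6, "ran": 0.4},
    "sat": {"on": 0.9, "down": 0.1},
    "on": {"the": 0.8, "a": 0.2},
}

def greedy(start: str, steps: int) -> list[str]:
    """Temperature 0: always append the single most probable next word."""
    words = [start]
    for _ in range(steps):
        options = NEXT.get(words[-1])
        if not options:
            break
        words.append(max(options, key=options.get))
    return words

print(" ".join(greedy("the", 12)))
# the cat sat on the cat sat on the cat sat on the
```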

What if we don't always take the word at the top of the list, that is, we increase the temperature?

Now each run of the same prompt gives a different result.

At a temperature of 0.8, there are many possible next words to choose from. If we rank these candidate words by probability, the probability of the n-th word decreases roughly as a power law, $p(n) \propto n^{-1}$. This kind of decay is very characteristic of the general statistics of language (compare Zipf's law). On a log-log plot it appears as a straight line, showing that the probabilities fall off in a regular, pronounced way.
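To see what "a straight line on a log-log plot" means, here is a small sketch. It builds an idealized distribution with $p(n) \propto n^{-1}$ (synthetic numbers, not measured data) and fits a straight line to $\log p$ against $\log n$ by ordinary least squares. The fit recovers a slope of -1.

```python
import math

def loglog_slope(probs: list[float]) -> float:
    """Least-squares slope of log(p) versus log(rank), with ranks starting at 1."""
    xs = [math.log(rank) for rank in range(1, len(probs) + 1)]
    ys = [math.log(p) for p in probs]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    return cov / var

# Idealized power law: p(n) proportional to 1/n for the top 1000 ranks.
raw = [1 / n for n in range(1, 1001)]
total = sum(raw)
probs = [r / total for r in raw]
print(round(loglog_slope(probs), 6))  # -1.0
```

Real next-word probabilities only approximately follow such a line, so their fitted slope would only be roughly -1.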

Obtaining Probabilities

Alright, we've seen what ChatGPT can do at the word level. Now, how can we calculate the probability of a letter?

The basic idea is simple: take a sample of text and count how often each letter appears in it.

For example, if we take the Wikipedia article on "book" and count the letters in it, we get the following:

![]()

These counts depend on the article we choose. For instance, the letter "o" is likely to be somewhat more common here than in English text in general, since the word "book" appears many times in this article.
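Counting letters takes only a few lines with Python's `collections.Counter`. The sample sentence below is made up and stands in for the article text. Applied to a real article, the same code would produce a table like the one above.

```python
from collections import Counter

def letter_frequencies(text: str) -> dict[str, float]:
    """Relative frequency of each letter a-z, ignoring case and non-letters."""
    letters = [c for c in text.lower() if "a" <= c <= "z"]
    counts = Counter(letters)
    total = len(letters)
    return {letter: counts[letter] / total for letter in sorted(counts)}

sample_text = "A book is a medium for recording information in the form of writing or images."
freqs = letter_frequencies(sample_text)
# The five most frequent letters in the sample.
print(sorted(freqs.items(), key=lambda kv: kv[1], reverse=True)[:5])
```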

Let's create a random string of letters using this list.

![]()

Let's add spaces as if these were meaningful words.

![]()

Now let's make the distribution of these "word" lengths match that of English. (Equivalently, this amounts to choosing how often the space character is used, which can be treated as one more parameter.)

![]()
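The steps above can be sketched in Python. The first step draws random letters according to their frequencies. The second places spaces so that "word" lengths follow a given distribution. Both frequency tables here are small made-up examples, not real English statistics.

```python
import random

# Hypothetical letter frequencies (a real table would come from counting text).
LETTER_FREQS = {"e": 0.20, "t": 0.15, "a": 0.14, "o": 0.13, "n": 0.12,
                "s": 0.10, "h": 0.09, "r": 0.07}
# Hypothetical distribution of word lengths: length -> probability.
WORD_LENGTH_FREQS = {1: 0.05, 2: 0.17, 3: 0.21, 4: 0.18, 5: 0.14,
                     6: 0.11, 7: 0.08, 8: 0.06}

def random_letters(n: int, rng: random.Random) -> str:
    """n letters drawn independently, weighted by LETTER_FREQS."""
    return "".join(rng.choices(list(LETTER_FREQS),
                               weights=list(LETTER_FREQS.values()), k=n))

def random_words(n_words: int, rng: random.Random) -> str:
    """Random 'words' whose lengths follow WORD_LENGTH_FREQS."""
    lengths = rng.choices(list(WORD_LENGTH_FREQS),
                          weights=list(WORD_LENGTH_FREQS.values()), k=n_words)
    return " ".join(random_letters(length, rng) for length in lengths)

rng = random.Random(1)
print(random_letters(40, rng))
print(random_words(8, rng))
```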

We still haven't produced any real words. What we have done so far is based on probabilities, but that is not enough: we need more than letters placed independently at random.

Let's calculate the probabilities of the letters on their own.

Next, here is a 2-gram graph showing the probabilities of letter pairs. (2-grams, often called "bigrams," are pairs of consecutive letters. Examining them shows which letters tend to follow which others, and how often.)
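A 2-gram table is a count of adjacent letter pairs, normalized per first letter so that each row gives $P(\text{next letter} \mid \text{current letter})$. The sketch below counts pairs inside words only, which is one reasonable convention among several. The word list is made up.

```python
from collections import Counter, defaultdict

def letter_bigram_probs(words: list[str]) -> dict[str, dict[str, float]]:
    """P(next | current) for adjacent letter pairs within each word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for word in words:
        w = word.lower()
        for current, nxt in zip(w, w[1:]):
            counts[current][nxt] += 1
    # Normalize each row so the probabilities for one letter sum to 1.
    return {
        current: {nxt: c / sum(row.values()) for nxt, c in row.items()}
        for current, row in counts.items()
    }

words = ["queen", "quick", "quiet", "equal", "quote", "qatar", "book", "look"]
table = letter_bigram_probs(words)
print(table["q"])  # 'u' dominates; 'a' comes only from "qatar"
```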

As the graph shows, in English the letter "q" is almost always followed by "u". Very few words have a different letter after "q"; these are special cases, such as "Qatar" or other words borrowed from other languages. So "q" without a following "u" is almost non-existent. This is one example of what we can infer from the graph, and by using such pair probabilities we make our generated text more consistent. Taking the 2-gram probabilities into account, the text we generate is:

![]()
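Generating text from such a table means repeatedly sampling the next character from the row for the current context. The sketch below generalizes this to an n-gram model over characters, including spaces, where the context is the previous n - 1 characters. Here n = 2 corresponds to the 2-gram case above, and larger n gives more word-like output if there is enough training text. The training string is a made-up placeholder.

```python
import random
from collections import Counter, defaultdict

def train_char_ngrams(text: str, n: int) -> dict[str, Counter]:
    """Count which character follows each (n-1)-character context."""
    model: dict[str, Counter] = defaultdict(Counter)
    for i in range(len(text) - n + 1):
        context, nxt = text[i:i + n - 1], text[i + n - 1]
        model[context][nxt] += 1
    return model

def generate_chars(model: dict[str, Counter], seed: str, length: int,
                   n: int, rng: random.Random) -> str:
    """Extend `seed` one character at a time; `seed` needs n-1 characters."""
    out = seed
    for _ in range(length):
        row = model.get(out[len(out) - (n - 1):])
        if not row:  # unseen context: stop
            break
        out += rng.choices(list(row), weights=list(row.values()), k=1)[0]
    return out

training_text = "the quick brown fox jumps over the lazy dog and the quiet queen "
n = 3
model = train_char_ngrams(training_text, n)
print(generate_chars(model, "th", 40, n, random.Random(0)))
```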

The more source text we use, the better these estimates become. And if we use the probabilities of longer n-grams, not just pairs, the output gets better still. Here are some examples:

There are about 40,000 reasonably commonly used words in English. By examining a large digital library (say, several million books containing billions of words), we can estimate how common each word is and which words tend to follow which others. We can then use these probabilities to generate "sentences." Below is an example generated this way.

![]()

As expected, the sentence is meaningless. How can we improve on it? Just as with letters, we can take into account not only the probabilities of individual words but also those of word pairs and even longer n-grams, and longer n-grams clearly give better results. With this in mind, one can imagine building "a ChatGPT" this way. But with 40,000 words there are already 1.6 billion possible 2-grams, and 64 trillion possible 3-grams. Not nearly enough English text has ever been written to estimate all these probabilities by counting alone and so produce a "proper" composition. By the time we reach a 20-word "text fragment," the number of possible combinations exceeds the number of particles in the universe.
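The arithmetic behind these numbers is easy to check. With the 40,000-word vocabulary above, the number of possible n-word sequences is $40{,}000^n$. The sketch below compares this with $10^{80}$, the commonly quoted order-of-magnitude estimate for the number of particles in the observable universe, used here only for comparison.

```python
VOCABULARY = 40_000
PARTICLES_ESTIMATE = 10**80  # rough, commonly quoted order of magnitude

for n in (1, 2, 3, 20):
    count = VOCABULARY**n
    # Python integers are exact, so len(str(count)) is the number of digits.
    print(f"{n:>2}-grams: {count:.3e} ({len(str(count))} digits)")

print(VOCABULARY**20 > PARTICLES_ESTIMATE)  # True
```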

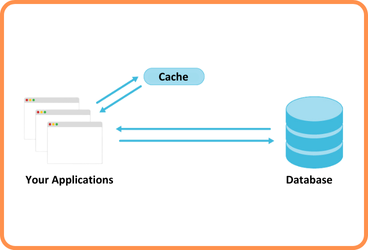

What we need instead is a model that lets us estimate these probabilities even for word sequences that never appear in the text we have. This is precisely what a "Large Language Model (LLM)" is good at.

Model

A model is a mathematical structure capable of learning from complex and extensive datasets to perform specific tasks. Language models, for instance, learn the structure and connections of a language by analyzing large amounts of text data. They then use this learned knowledge to perform various tasks such as text generation, question answering, and translation. In summary, while the term "model" generally refers to a general structure or algorithm trained for a specific task, "Large Language Model (LLM)" refers to specific models trained on large datasets with broader linguistic knowledge and capacity.

Let me explain what a model is with an analogy. Imagine we want to know, as Galileo did in his time, how long it takes a cannonball dropped from each floor of the Leaning Tower of Pisa to hit the ground. You could drop a cannonball from each floor and record the results in a table. Alternatively, in the spirit of theoretical science, instead of measuring every case we can build a model that gives us a procedure for calculating the answer.

Let's say we have idealized data on how long a cannonball takes to fall from each floor.

Now suppose we want to know how long it takes to fall from a height for which we have no data.

Is there a way to calculate this without knowing anything more? We would of course use the laws of physics, but let's assume we don't know the underlying law.

Then we can draw a line that follows the data points and use it to estimate how long a cannonball takes to fall from any floor.

Simple mathematical functions often fit such data well. For this data, a curve of the form $a + bx + cx^2$ fits quite well.

However, if we use $a + b/x + c\sin(x)$ instead, we get a wavy curve that does not follow the data.
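Both fits can be reproduced with ordinary least squares. The sketch assumes idealized fall times $t = \sqrt{2h/g}$ with a hypothetical 3.5 m per floor and $g = 9.81\ \text{m/s}^2$; these are made-up stand-ins, not measurements from Pisa. It fits each model with `numpy.linalg.lstsq`, then compares how well each matches the data and predicts a height we have no data for.

```python
import numpy as np

G = 9.81            # m/s^2
FLOOR_HEIGHT = 3.5  # hypothetical metres per floor

floors = np.arange(1, 9, dtype=float)           # floors 1..8
times = np.sqrt(2 * FLOOR_HEIGHT * floors / G)  # idealized fall times (s)

def fit(basis, x, y):
    """Least-squares coefficients for y ~ sum_k c_k * basis_k(x); returns a predictor."""
    design = np.column_stack([f(x) for f in basis])
    coeffs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return lambda x_new: np.column_stack([f(x_new) for f in basis]) @ coeffs

quadratic = fit([np.ones_like, lambda x: x, lambda x: x**2], floors, times)
wavy = fit([np.ones_like, lambda x: 1 / x, np.sin], floors, times)

for name, model in (("a + bx + cx^2", quadratic), ("a + b/x + c sin(x)", wavy)):
    rms = np.sqrt(np.mean((model(floors) - times) ** 2))
    print(f"{name:>20}: rms error {rms:.3f} s")

x_new = np.array([4.5])  # a height between floors, not in the data
print(quadratic(x_new)[0], np.sqrt(2 * FLOOR_HEIGHT * 4.5 / G))
```

The quadratic is itself only an approximation here, because the idealized data actually follows a square root. That is the point: the form we choose is part of the model.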

I give this example to emphasize that there is no such thing as a "modelless model": we are never drawing the line on a blank sheet of paper. Every model has some underlying structure and assumptions, plus a set of "knobs" (parameters) that can be adjusted to fit the data. ChatGPT has approximately 175 billion of these knobs.

The remarkable thing is that these parameters alone are enough for ChatGPT to compute next-word probabilities good enough to produce "reasonable text," one word at a time.

Visual Model

In the modeling example above, the model was derived from simple numerical data. Models of that kind, however, are not enough to mimic human language, whose complexity and depth cannot be captured by a simple mathematical formula. So, before returning to language, it helps to look at a task that humans perform easily but that is just as hard to describe with simple mathematics: recognizing images.

Consider recognizing handwritten digits. A good first step is to collect many different ways each digit can be written, enlarging our set of examples.

We could then recognize a new digit by comparing it pixel by pixel with these examples, which gives some results. Humans, however, seem to do much better: we can recognize handwritten digits even when they are distorted in various ways.

Suppose we want to build a model for digits like those above. Can we build a model that tells us which digit an image shows by looking at its pixel values (black, white, and shades of gray)? It turns out we can, though not surprisingly, it is not simple: even a small example involves about half a million mathematical operations. Once we have such a function, we find the digit by feeding the image's pixel values into it. The idea behind such functions is inspired by the human brain, and they are called "Artificial Neural Networks."

For now, let's treat this function as a black box: we feed in the pixel values of a handwritten digit, and it tells us which digit it is.

Before diving into Artificial Neural Networks, there is one point to consider. If we progressively blur a handwritten digit, the network will eventually start giving incorrect answers. Where is the limit? What actually happens that makes the digit no longer recognizable?

However, if our goal is a model that mimics what humans do when they recognize images, the real question is what a person would say if shown one of these blurred images without knowing where it came from. Is there a mathematical model or dataset that tells us the "correct" answer? There is not. So we ourselves become the criterion: if our machine identifies digits as far as we can, and in the same way we do, we can say we have a good model.

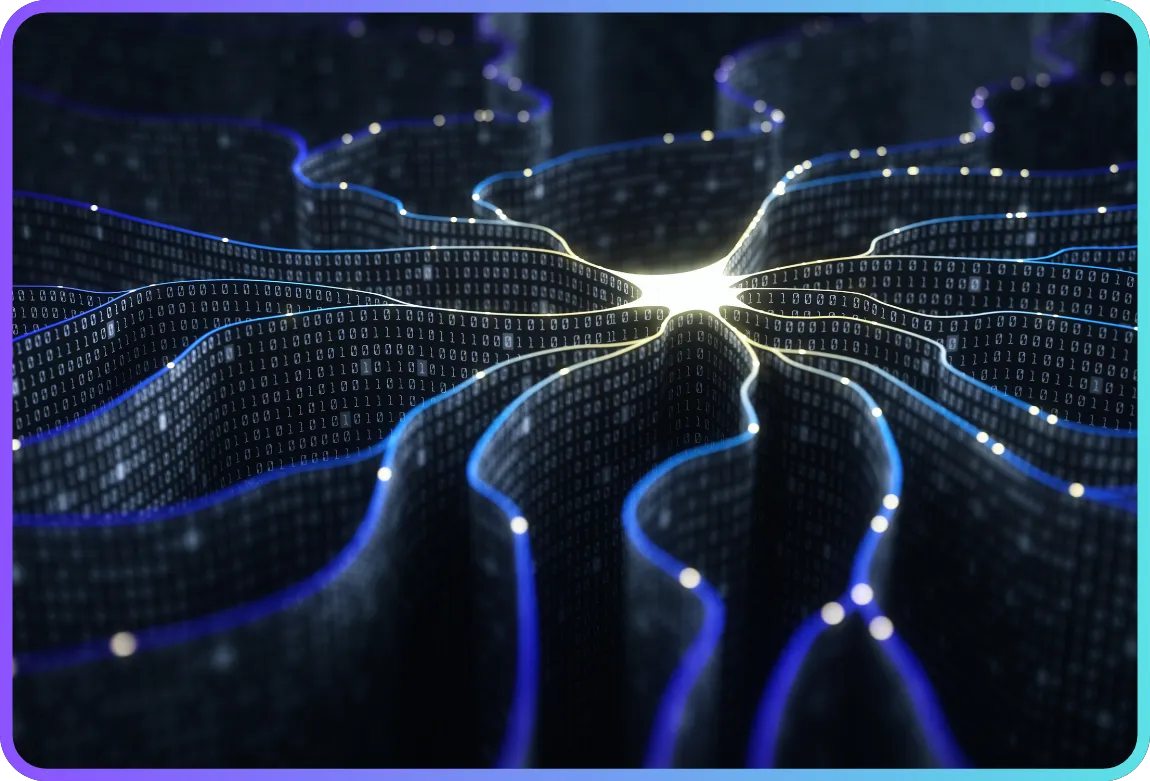

Artificial Neural Networks

As mentioned above, Artificial Neural Networks (ANNs) are computer adaptations of the remarkable self-learning structure found in the human brain; loosely, they are a model of the brain. The goal is to connect many simple artificial neurons, and tune how they activate, so that the network turns a given input into a decision.

Let's recall the neuron structure in the brain:

The axon serves as the conducting channel for transmitting generated pulses and outputs. Dendrites, on the other hand, are receptors that receive signals from other neurons. Synapses are structures that connect two neurons.

In this electrochemical system, the inside of a resting neuron is polarized at about −85 mV. If this potential rises to about −40 mV, "excitation" occurs; if it falls to about −90 mV, the neuron is "inhibited." In other words, there is a threshold for excitation. This is the most basic description of how a neuron is excited.

Now let's turn to the mathematical model of this structure.

Ensemble Methods for Pedestrian Detection in Dense Crowds - Scientific Figure on ResearchGate.

The figure shows the mathematical model of a neuron.

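Here the $x_i$ are the inputs, the $\omega_i$ are the weights, which determine how strongly each input contributes, and b is the bias. The neuron computes the weighted sum of its inputs plus the bias and passes it through an activation function f, so its output is $y = f\left(\sum_i \omega_i x_i + b\right)$. The bias shifts the activation function and plays the role of the firing threshold. Without it, an all-zero input would always give a weighted sum of 0, whatever the weights, and the neuron could not learn to respond to it.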
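In code, the model neuron above is a weighted sum followed by an activation function. This sketch uses a logistic (sigmoid) activation, which is one common choice among several. The inputs, weights, and bias are made-up numbers.

```python
import math

def sigmoid(z: float) -> float:
    return 1 / (1 + math.exp(-z))

def neuron(x: list[float], w: list[float], b: float) -> float:
    """y = f(sum_i w_i * x_i + b) with f = sigmoid."""
    if len(x) != len(w):
        raise ValueError("inputs and weights must have the same length")
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

w = [0.8, -0.5, 0.3]
print(neuron([1.0, 0.5, 2.0], w, b=0.1))   # z = 0.8 - 0.25 + 0.6 + 0.1 = 1.25
print(neuron([0.0, 0.0, 0.0], w, b=0.0))   # all-zero input, no bias: always 0.5
print(neuron([0.0, 0.0, 0.0], w, b=-2.0))  # the bias alone moves the output
```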

Below is an Artificial Neural Network with three hidden layers, not counting the input and output layers. ChatGPT's network is vastly larger, with approximately 175 billion weights.

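Counting the connections between consecutive layers gives the number of weights ω: $4\times5 + 5\times5 + 5\times5 + 5\times3 = 85$.

Each neuron outside the input layer has one bias, so the number of biases b is $5 + 5 + 5 + 3 = 18$, giving 103 adjustable parameters in total.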
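The count generalizes to any fully connected network. Between consecutive layers of sizes $n_k$ and $n_{k+1}$ there are $n_k \times n_{k+1}$ weights, and every non-input neuron has one bias. This minimal sketch assumes layer sizes 4, 5, 5, 5, 3: four inputs, three hidden layers of five neurons, and three outputs, consistent with the bias count 5 + 5 + 5 + 3.

```python
def count_parameters(layer_sizes: list[int]) -> tuple[int, int]:
    """Return (weights, biases) for a fully connected feed-forward network."""
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:])  # one bias per non-input neuron
    return weights, biases

weights, biases = count_parameters([4, 5, 5, 5, 3])
print(weights, biases, weights + biases)  # 85 18 103
```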

The next questions are how the weights ω are determined, how many layers to use, how artificial neural networks are trained, and how all of this relates to performance, scalability, and optimization.
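How the weights are determined is a large topic, but its core idea, gradient descent, fits in a few lines. This sketch trains a single sigmoid neuron to compute logical AND. It repeatedly nudges ω and b against the gradient of the cross-entropy loss. The learning rate and number of steps are arbitrary choices. Real networks apply the same idea (via backpropagation) at vastly larger scale.

```python
import math

def sigmoid(z: float) -> float:
    return 1 / (1 + math.exp(-z))

# Training data for logical AND: inputs -> target output.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]

def train(steps: int = 5000, lr: float = 0.5) -> tuple[list[float], float]:
    """Full-batch gradient descent on the weights w and bias b."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, target in DATA:
            y = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            err = y - target  # d(cross-entropy)/dz for a sigmoid output
            grad_w[0] += err * x[0]
            grad_w[1] += err * x[1]
            grad_b += err
        # Step each parameter against the averaged gradient.
        w = [wi - lr * g / len(DATA) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(DATA)
    return w, b

w, b = train()
for x, target in DATA:
    print(x, target, round(sigmoid(w[0] * x[0] + w[1] * x[1] + b), 3))
```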

Readers who want to go deeper can explore the topic of neural networks further. How can such a network "recognize" images? The concept of "attractors" is one way to think about how neural networks operate and how they learn from specific datasets. It helps us understand how a network's behavior evolves toward certain outcomes, and how it behaves along the way.

Embeddings

An embedding represents a word or phrase as a point in Euclidean space, that is, as a list of numbers. The representation is designed to capture the meaning of the word, so words with similar meanings end up close to each other and meanings can be compared by comparing vectors. This makes embeddings a standard technique for finding semantically similar words and sentences.

Important Topics in Machine Learning That Every Data Scientist Must Know | by Trist'n Joseph | Towards Data Science

In this space (a vector space), each point is a word or phrase, and points that are close together correspond to words or phrases with similar meanings. For instance, "book" and "dictionary" might be close together, while "book" and "stone" are far apart. This lets the model move between semantically related words without losing context, which helps it choose an "appropriate" next word. In the figure above, each word is assigned coordinates in two dimensions.
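Closeness in such a space is commonly measured with cosine similarity, or with plain Euclidean distance. The three-dimensional vectors below are invented purely for illustration. Real embeddings are learned from data and have far more dimensions.

```python
import math

# Made-up 3-D "embeddings", chosen by hand for this example.
EMBEDDINGS = {
    "book":       [0.9, 0.8, 0.1],
    "dictionary": [0.8, 0.9, 0.2],
    "stone":      [0.1, 0.2, 0.9],
}

def cosine_similarity(u: list[float], v: list[float]) -> float:
    """cos(angle) between u and v: 1 = same direction, 0 = unrelated."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nearest(word: str) -> str:
    """The other word whose vector points in the most similar direction."""
    return max((w for w in EMBEDDINGS if w != word),
               key=lambda w: cosine_similarity(EMBEDDINGS[word], EMBEDDINGS[w]))

print(round(cosine_similarity(EMBEDDINGS["book"], EMBEDDINGS["dictionary"]), 3))
print(round(cosine_similarity(EMBEDDINGS["book"], EMBEDDINGS["stone"]), 3))
print(nearest("book"))  # dictionary
```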

Real models use far more dimensions than two or three. The smallest GPT-2 model uses 768-dimensional embeddings, and the 175-billion-parameter GPT-3 model uses 12,288. More dimensions let a model capture more of the relationships between features in the data. Since we cannot draw that many dimensions, below is an example of a three-dimensional representation.

If we imagine ChatGPT moving through this space in search of a next word that fits in meaning and context, and represent each step as a vector between points, we find no clear geometric law governing the path. How the internal behavior ChatGPT acquires produces human-like text is therefore far from transparent, and (at least for now) remains an unsolved empirical question.

Miracle of Production

In essence, ChatGPT works in three steps. First, it takes the prompt (the text so far) and converts it into an embedding, an array of numbers that represents it. Next, it passes this array through the layers of its neural network, whose weights were fixed during training, to produce a new array of numbers. Finally, it uses this new array to compute probabilities for the possible next words.
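The three steps can be mimicked with a toy model. The sketch looks up an embedding for each token, passes the result through a layer, and turns the output into probabilities with a softmax. Everything here is hypothetical: the five-word vocabulary, the dimensions, and the random, untrained weights. A single layer applied to the averaged token vectors also stands in for ChatGPT's many transformer layers. In a trained model the weights would be learned numbers, held fixed while generating, and the probabilities would be meaningful.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB = ["the", "cat", "sat", "on", "mat"]  # hypothetical tiny vocabulary
D = 8                                       # hypothetical embedding size

# Random, untrained weights; a real model learns these, then keeps them fixed.
embedding_table = rng.normal(size=(len(VOCAB), D))
hidden_weights = rng.normal(size=(D, D))
output_weights = rng.normal(size=(D, len(VOCAB)))

def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def next_word_probs(tokens: list[str]) -> np.ndarray:
    # Step 1: text -> embeddings (one vector per token).
    x = embedding_table[[VOCAB.index(t) for t in tokens]]
    # Step 2: one layer applied to the average of the token vectors,
    # a crude stand-in for the real network's many layers.
    h = np.tanh(x.mean(axis=0) @ hidden_weights)
    # Step 3: new vector -> one score per vocabulary word -> probabilities.
    return softmax(h @ output_weights)

probs = next_word_probs(["the", "cat", "sat", "on"])
print(dict(zip(VOCAB, probs.round(3))))
```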

A critical point is that every part of this pipeline is implemented by a neural network whose weights are determined through end-to-end training. In other words, apart from the overall architecture, nothing is explicitly designed; everything is simply "learned" from the training data.

If we try to find out why it took a particular path in this process, we can't really do so. The weight matrices are huge and complex, and the network's behavior was learned rather than explicitly programmed. In the end, even though we write the code for the neural network, we do not fully understand what it does. If we stepped back and looked at these large matrices, perhaps we would see something like brain activity measured with fMRI. Who knows?

So I have explained, at a basic level, how ChatGPT constructs human-like sentences using the simple steps above. It is still astonishing that such a network can follow the grammatical rules and subtleties of several languages. Nobody tells it the rules of language; it somehow "works them out" internally through its own learning, and comes to "speak" and "understand" in a way that resembles what we humans do. That is remarkable from any perspective.

Computational Irreducibility

So far, I have described the overall flow of ChatGPT and the topics its algorithms cover. Finally, the term "computational irreducibility" describes situations where the behavior of a complex system or model cannot be reduced to a simpler or summary form. In other words, there is no shortcut: because of the nature of the system itself, its computation cannot be expressed in a simpler form (e.g., big data analysis, quantum mechanics, numerical simulations).

Any mathematical calculation takes special effort for the human brain. Computers are better at this than we are, so we leave them the computations that are reducible but laborious. However, some problems grow so quickly in cost that even computers cannot solve large instances in practice (e.g., the Traveling Salesman Problem (TSP)). The issue with computationally irreducible processes is that the only way to find out what they will do is to carry out the computation explicitly, step by step. Short of that, we cannot guarantee that the unexpected won't happen.
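The standard example of computational irreducibility in "A New Kind of Science" (whose chapters are cited in the sources) is the Rule 30 cellular automaton. Each cell's next value depends only on itself and its two neighbours. Yet there is no known shortcut for predicting, say, the centre cell after n steps: as far as anyone knows, you have to run all n steps. A minimal sketch:

```python
def rule30_center_column(steps: int) -> list[int]:
    """Run Rule 30 from a single black cell; return the centre cell at each step."""
    width = 2 * steps + 3  # wide enough that the edges never matter
    cells = [0] * width
    center = width // 2
    cells[center] = 1
    column = [cells[center]]
    for _ in range(steps):
        # Rule 30: new = left XOR (centre OR right); cells beyond the edges count as 0.
        cells = [
            (cells[i - 1] if i > 0 else 0)
            ^ (cells[i] | (cells[i + 1] if i < width - 1 else 0))
            for i in range(width)
        ]
        column.append(cells[center])
    return column

print(rule30_center_column(20))
```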

There is a fundamental, non-trivial tension between a system's trainability and its computational capability. The more we want a system to make full use of its computational capabilities, the more computational irreducibility it will show, and the harder it will be to train. This is a bit like flipping a fair coin. In theory the probability of heads is exactly ½, but in any real series of flips the observed fraction of heads rarely comes out to exactly ½. Even the word "fair" in mathematics draws a line that spares us from computing the irreducible details of each actual flip.
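The coin example is easy to check numerically. The probability of getting exactly n/2 heads in n fair flips is $\binom{n}{n/2}/2^n$, which shrinks roughly like $\sqrt{2/(\pi n)}$. Meanwhile, a simulated fraction of heads drifts toward ½ without usually landing on it exactly. The seed and flip counts below are arbitrary.

```python
import math
import random

def prob_exactly_half(n: int) -> float:
    """P(exactly n/2 heads in n fair flips); n must be even."""
    return math.comb(n, n // 2) / 2**n

for n in (10, 100, 1000):
    print(n, round(prob_exactly_half(n), 4), round(math.sqrt(2 / (math.pi * n)), 4))

rng = random.Random(7)
heads = 0
for flips in range(1, 100_001):
    heads += rng.random() < 0.5  # True counts as 1
    if flips in (10, 100, 1_000, 10_000, 100_000):
        print(flips, heads / flips)
```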

The most important conclusion to draw from this is that tasks such as writing essays, which humans can do but which we didn't think computers could, turn out to be computationally easier than we thought. Handling language has proved to be a computationally "shallow" problem, and this brings us closer to a theory of language. Language is just one example here; there are certainly other promising areas waiting for Artificial Intelligence to help resolve them.

In the not-so-distant future, Artificial Intelligence will help us understand language better, just as we come to understand Artificial Intelligence itself better. What has allowed us over the last century to go beyond what is accessible to "pure, unaided human thought," and to capture more of the physical and computational universe for human purposes, is the use of both practical and conceptual tools.

Sources:

- Wolfram Neural Net Repository: LeNet Trained on MNIST Data. resources.wolframcloud.com/NeuralNetRepository/resources/LeNet-Trained-on-MNIST-Data/

- Convolution Layer. reference.wolfram.com/language/ref/ConvolutionLayer.html

- Future Internet | Free Full-Text | ChatGPT and Open-AI Models: A Preliminary Review. (n.d.) www.mdpi.com/1999-5903/15/6/192

- “ChatGPT — The Era of Generative Conversational AI Has Begun” (Week #3 - article series). (n.d.) www.linkedin.com

- How Does ChatGPT Actually Work? An ML Engineer Explains. (n.d.) www.scalablepath.com

- How to build a Sustainable ChatGPT Server Architecture? (n.d.) www.diskmfr.com

- ChatGPT, the rise of generative AI. (n.d.) www.cio.com

- How ChatGPT gets Stitched with Cloud Services? (n.d.) itechnolabs.ca

- ChatGPT Prompt Engineering: Crafting Better AI Dialogues. (n.d.) www.pixelhaze.academy/blog/chatgpt-prompt-engineering

- How ChatGPT works and AI, ML & NLP Fundamentals | Pentalog. (n.d.) www.pentalog.com/blog/tech-trends/chatgpt-fundamentals/

- What Is ChatGPT Doing … and Why Does It Work? (n.d.) https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/

- Chapter 6: Starting from Randomness. (n.d.) www.wolframscience.com/nks/chap-6--starting-from-randomness/#sect-6-7--the-notion-of-attractors

- ChatGPT: Understanding the ChatGPT AI Chatbot. (n.d.) www.eweek.com/big-data-and-analytics/chatgpt/

- Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy - ScienceDirect. (n.d.) www.sciencedirect.com/science/article/pii/S0268401223000233

- Chapter 12: The Principle of Computational Equivalence. (n.d.) www.wolframscience.com/nks/chap-12--the-principle-of-computational-equivalence/

- How ChatGPT actually works. (n.d.) https://www.assemblyai.com/blog/how-chatgpt-actually-works/

- https://finnaarupnielsen.files.wordpress.com/2013/10/brownzipf.png?w=487

- https://preview.redd.it/snny9gfpqpdz.png?width=960&crop=smart&auto=webp&s=dc161cb38f6f5ecdaea3d6bcd263a0ec824cec80

- https://course.elementsofai.com/static/4_1-mnist-9fe2c1b5d633ca5e9d592a0e0b8262d4.svg

- https://www.researchgate.net/figure/Neuron-structure-in-the-human-brain-Reprinted-with-permission-from-4_fig2_317028037

- Ensemble Methods for Pedestrian Detection in Dense Crowds - Scientific Figure on ResearchGate. Available from: https://www.researchgate.net/figure/Mathematical-model-of-a-biological-neuron_fig7_336675545 [accessed 04 Dec, 2023]

- https://tikz.net/neural_networks/ (image: https://tikz.net/wp-content/uploads/2021/12/neural_networks-001.png)

- https://towardsdatascience.com/important-topics-in-machine-learning-that-every-data-scientist-must-know-9e387d880b3a

- https://miro.medium.com/v2/resize:fit:640/format:webp/0*LyxxcclWH3_zY2u3.gif
