This blog post is a guide for anyone who is new to artificial intelligence (AI) or who has heard some of its concepts but doesn’t fully understand how they are used. It explains AI concepts at a basic level and introduces a powerful AI technology called large language models (LLMs). Let's start with some foundational concepts.

Figure 1. AI Layers

Artificial Intelligence (AI)

AI is a general term that describes the ability of computer systems to exhibit human-like intelligence. AI can solve complex problems using large datasets, powerful computing resources, and advanced algorithms. AI can be divided into two main categories:

- Narrow AI: Refers to systems designed to perform specific tasks. For example, voice assistants like Siri or Alexa, facial recognition systems, and recommendation engines are applications of narrow AI. These systems are optimized for specific tasks and cannot be used for others.

- General AI: Refers to AI with a general intelligence level equivalent to that of humans. Such AI would be able to perform a wide variety of tasks, just like humans. However, this level of AI has not yet been achieved and remains a research goal.

Machine Learning (ML)

Machine learning is a branch of AI that enables computer systems to learn from data. This means that with large datasets, algorithms learn certain patterns and can make more accurate predictions in the future based on what they have learned. Machine learning is a core building block of AI and is used in various fields.

How Does Machine Learning Work?

Machine learning works by allowing algorithms to learn patterns and relationships from data. After training on data, these algorithms can make predictions based on new data. The main goal is to analyze the features in the data and establish a relationship between inputs and outputs. This process typically involves the following steps:

- Data Collection: Gather the data needed to train the model.

- Data Preprocessing: Clean the data, correct missing or erroneous information, and transform it into a format suitable for the model.

- Model Selection: Choose the most appropriate machine learning algorithm for the problem.

- Model Training: The model starts learning patterns from the dataset. The model's performance is measured with various metrics.

- Model Evaluation: The model’s accuracy and generalization ability are evaluated with test data.

- Prediction and Decision Making: Once trained, the model can make predictions and decisions based on new data.
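The steps above can be sketched in a few lines of plain Python. This is only a toy illustration: the house sizes and prices are invented, the "hold out the last two rows" split is a simplification, and simple linear regression is just one possible model choice.

```python
# Toy end-to-end workflow: collect, preprocess, train, evaluate, predict.
# All numbers are made up for illustration.

# 1. Data collection: (house size in m^2, price in thousands). None marks a missing value.
raw = [(50, 150), (60, 180), (None, 200), (80, 240),
       (100, 300), (120, 360), (70, 210), (90, 270)]

# 2. Data preprocessing: drop rows with missing values.
data = [(x, y) for x, y in raw if x is not None and y is not None]

# Hold out the last two rows as test data and train on the rest.
train, test = data[:-2], data[-2:]

# 3. Model selection: simple linear regression, y = slope * x + intercept.
# 4. Model training: closed-form least squares on the training rows.
def fit(rows):
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    var = sum((x - mean_x) ** 2 for x, _ in rows)
    slope = cov / var
    return slope, mean_y - slope * mean_x

def predict(model, x):
    slope, intercept = model
    return slope * x + intercept

# 5. Model evaluation: mean squared error on the held-out rows.
def mse(model, rows):
    return sum((predict(model, x) - y) ** 2 for x, y in rows) / len(rows)

model = fit(train)
test_error = mse(model, test)

# 6. Prediction: estimate the price of a new, unseen house.
new_price = predict(model, 110)
```

Because the invented data lies exactly on a straight line, the test error here is zero. Real data is noisy, so the test error is what tells you how well the model generalizes.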

Types of Machine Learning

Machine learning is generally divided into three main categories:

- Supervised Learning: In supervised learning, the training data is labeled with both inputs and corresponding correct outputs. The model learns the relationship between inputs and outputs. The goal is for the model to predict correct outputs based on new inputs. An example would be predicting house prices based on the house’s characteristics (size, location, number of rooms).

- Unsupervised Learning: In unsupervised learning, the training data has no labels. The model tries to find meaningful patterns and relationships from only the inputs. The model aims to discover the structure of the data and group similar items or find frequently repeating patterns. An example would be grouping similar content on websites or products.

- Reinforcement Learning: In reinforcement learning, a model makes decisions in an environment and receives rewards or penalties based on its actions. The goal is to learn correct strategies over time. The model learns which steps to take to get the highest reward. An example is an AI playing chess and learning the best moves.
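To make the unsupervised case concrete, here is a minimal sketch of k-means clustering on one-dimensional data. The data, the starting centroids, and the function name `kmeans_1d` are all assumptions made for this example. Notice that the algorithm never sees a label: it only groups values that are close to each other.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Plain k-means on 1-D data; returns (centroids, cluster index per point)."""
    centroids = list(centroids)
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: abs(p - centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its points.
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                centroids[k] = sum(members) / len(members)
    return centroids, labels

# Hypothetical data: minutes that visitors spent on a page. No labels are given.
minutes = [1.0, 1.5, 2.0, 9.0, 10.0, 11.0]
centers, groups = kmeans_1d(minutes, centroids=[0.0, 5.0])
```

The result separates the short visits from the long ones, even though nobody told the algorithm that those two groups exist.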

Machine Learning Algorithms

There are many different algorithms used in machine learning. Here are some of the most popular ones:

- Linear Regression: Used to predict continuous variables. It learns the linear relationship between inputs and outputs.

- Logistic Regression: Used for binary classification problems (yes/no).

- Decision Trees: Learns the relationships between inputs and outputs hierarchically. The data is split into branches based on various rules.

- Support Vector Machines (SVM): Tries to find the boundary that separates the data and is used for classification problems.

- K-Nearest Neighbors (KNN): Classifies or predicts new data by looking at its closest neighbors.

- Clustering: Groups unlabeled data into clusters that are not defined in advance (for example, k-means).

- Random Forest: Uses multiple decision trees to make more accurate predictions.
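As one example from this list, K-Nearest Neighbors is simple enough to sketch with the standard library alone. The training points and the labels "small" and "large" below are hypothetical, and `knn_predict` is a name chosen for this example, not a library function.

```python
from collections import Counter
import math

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    by_distance = sorted(train, key=lambda row: math.dist(row[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical labeled points: (feature vector, class label).
train = [
    ((1.0, 1.0), "small"), ((1.5, 2.0), "small"), ((2.0, 1.5), "small"),
    ((6.0, 6.5), "large"), ((7.0, 6.0), "large"), ((6.5, 7.0), "large"),
]

label_a = knn_predict(train, (1.2, 1.4))   # lies near the "small" group
label_b = knn_predict(train, (6.4, 6.2))   # lies near the "large" group
```

There is no separate training step here: the "model" is just the stored data, and all the work happens at prediction time.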

Machine learning is a powerful tool for extracting meaningful information from large datasets. In many applications, it can make predictions and decisions automatically, without human intervention. A model can also improve over time when it is retrained on new data. However, machine learning also comes with some challenges:

- Data Requirements: Effective machine learning models require large and high-quality datasets.

- Overfitting: A model can become too tailored to the training data, leading to poor performance on new data.

- Algorithm Selection and Tuning: Choosing the right algorithm and correctly tuning its parameters can be time-consuming and challenging.

Deep Learning (DL)

Deep learning is a subset of machine learning that utilizes artificial neural networks designed to understand complex data structures. It processes large amounts of data, extracts patterns, and is used for tasks such as classification or making predictions. Typically, deep learning is implemented through multi-layered neural networks, and it excels when given sufficient data and computational power.

The foundation of deep learning lies in artificial neural networks (ANNs). These networks consist of layers where each layer processes input from the previous one and passes it to the next. They are named after their resemblance to biological neural networks in humans.

- Artificial Neural Networks (ANN): These networks are composed of three main types of layers:

  - Input Layer: This layer receives the input data, with each node representing a feature or data point.

  - Hidden Layers: These layers process the input. Neurons in these layers are interconnected, and each connection has a weight. Hidden layers learn relationships and patterns within the data.

  - Output Layer: This is the layer that produces the final result, providing predictions or classifications.

In deep learning, the number of hidden layers is substantial, which is why the models are called "deep." Compared to traditional neural networks, deep learning models have many hidden layers, allowing them to solve more complex problems.
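The layer structure described above can be sketched as a tiny forward pass: an input of two features, one hidden layer with two neurons, and one output neuron. The weights are hand-picked, hypothetical values (a real network would learn them), and sigmoid is just one possible activation function.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all inputs."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical, hand-picked weights; a real network learns these during training.
hidden_w = [[0.5, -0.5], [1.0, 1.0]]   # 2 hidden neurons, 2 inputs each
hidden_b = [0.0, -1.0]
output_w = [[1.0, -1.0]]               # 1 output neuron, 2 hidden inputs
output_b = [0.0]

def forward(features):
    hidden = dense(features, hidden_w, hidden_b, sigmoid)   # hidden layer
    return dense(hidden, output_w, output_b, sigmoid)       # output layer

prediction = forward([1.0, 1.0])[0]   # a value between 0 and 1
```

A "deep" model follows the same pattern, only with many more hidden layers stacked between the input and the output.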

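- Neurons and Activation Functions: Each neuron multiplies its inputs by weights, sums the results (usually adding a bias term), and applies an activation function. Activation functions such as sigmoid or ReLU shape the neuron's output and make the model non-linear, which lets the network learn relationships that a purely linear model cannot.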

- Training and Learning Process: Deep learning models learn relationships in the data by:

  - Feedforward: Data moves forward through the layers from input to output.

  - Backpropagation: Errors (wrong predictions) are calculated and propagated back through the network to update the weights. The goal is to minimize the error by optimizing the model with each step.

- Loss Function: This function measures the difference between the model’s predictions and actual results. Deep learning models continuously adjust their weights to minimize this difference.
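To see how the feedforward pass, the loss function, and the weight updates fit together, here is a minimal sketch that trains a single sigmoid neuron on the logical OR function. With only one neuron, backpropagation reduces to a single application of the chain rule, so this is a simplification of what happens in a multi-layer network. The learning rate and the number of epochs are assumed values chosen for this toy problem.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset: the logical OR function.
samples = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
           ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

weights, bias = [0.0, 0.0], 0.0
learning_rate = 1.0   # assumed value for this toy problem

def forward(x):
    # Feedforward: weighted sum of the inputs plus bias, then the activation.
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def loss():
    # Loss function: binary cross-entropy averaged over the dataset.
    total = 0.0
    for x, y in samples:
        p = forward(x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(samples)

initial_loss = loss()
for _ in range(2000):
    grad_w, grad_b = [0.0, 0.0], 0.0
    for x, y in samples:
        error = forward(x) - y   # gradient of the loss w.r.t. the weighted sum
        for i, xi in enumerate(x):
            grad_w[i] += error * xi / len(samples)
        grad_b += error / len(samples)
    # Gradient descent step: move the weights against the gradient.
    weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b

final_loss = loss()
predictions = [round(forward(x)) for x, _ in samples]
```

After training, the loss is lower than at the start and the rounded predictions match the OR targets. Deep learning frameworks automate exactly this loop, computing the gradients for every layer instead of for one neuron.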

Advantages of Deep Learning

- Automatic Feature Learning: Traditional machine learning methods often require manual feature engineering, but deep learning models learn these features themselves. This allows the model to extract more information from the data, though it requires significant computational power (e.g., GPUs).

- Works Well with Large Datasets: Many traditional machine learning algorithms stop improving once they have seen a certain amount of data, whereas deep learning models tend to keep improving as datasets grow, leading to more accurate results.

- Solves Complex Problems: Deep learning excels in tasks like image recognition, natural language processing, and speech recognition.

Applications of Deep Learning

Deep learning is widely used in fields such as image recognition, speech recognition, and natural language processing (NLP) and impacts our daily lives in various ways.

Deep Learning Algorithms

Deep learning includes various architectures and algorithms:

- Artificial Neural Networks (ANN): The most basic neural network structure, usually composed of input, hidden, and output layers.

- Convolutional Neural Networks (CNN): Primarily used for working with image and video data, highly effective in tasks like image recognition and classification.

- Recurrent Neural Networks (RNN): These networks handle time-series and sequential data, often used in natural language processing and speech recognition.

- Generative Adversarial Networks (GANs): Use two competing networks (a generator and a discriminator) to create new data, such as images or videos.

- Transformer Models: Widely popular in NLP tasks, with models like GPT and BERT based on this architecture.

Deep learning is recognized as one of the most powerful AI technologies today, having achieved significant breakthroughs in image, speech, and language understanding. While it continues to evolve, deep learning also presents several challenges, such as:

- High Data and Computational Power Requirements: Deep learning algorithms need vast amounts of data and powerful computational resources.

- Overfitting: The model can become too attuned to the training data, leading to poor performance on new data.

- Interpretability: Deep learning models are often referred to as "black boxes" since it's difficult to explain how they arrive at a particular outcome.

Natural Language Processing (NLP)

Natural Language Processing (NLP) is a field of AI focused on enabling computers to understand, interpret, and generate human language in text or speech form. It lies at the intersection of AI and linguistics, and modern NLP systems rely heavily on deep learning techniques.

Key Components of NLP

NLP analyzes both the syntactic and semantic structure of language through several stages:

- Syntax Analysis: Verifies if the words in a sentence adhere to grammatical rules, analyzing parts of speech (e.g., noun, verb, adjective) and sentence structure (e.g., subject, verb, object).

- Semantic Analysis: Determines the meaning of words and sentences, extracting the meaning of the overall sentence (e.g., "reading a book" implies the act of reading material).

- Morphological Analysis: Analyzes the roots, affixes, and derivations of words. For example, the word "children" is analyzed as "child" (root) and "-ren" (plural suffix).

- Sentiment Analysis: Detects whether a sentence or text conveys positive, negative, or neutral sentiments. For example, "This meal was amazing!" reflects a positive sentiment.

- Context Analysis: Resolves the meaning of words and sentences based on context. For example, "race" could mean a grouping of people or a competition between runners, depending on the context.

- Named Entity Recognition (NER): Identifies entities such as people, places, or organizations in a text (e.g., "Ali went to Istanbul" identifies "Ali" as a person and "Istanbul" as a city).

- Disambiguation: Resolves the meaning of words with multiple meanings based on context (e.g., in "He ate the apple," "apple" refers to the fruit, not the technology company).

- Summarization: Condenses long texts into shorter summaries while maintaining the core ideas.

- Question Answering: Provides accurate answers to questions based on provided text or information (e.g., "What is the capital of Turkey?" - "Ankara").
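As a small illustration of one of these components, the sketch below performs sentiment analysis with a hand-made word list. This is a deliberately naive approach and not how modern deep-learning-based NLP systems work: the word lists are hypothetical, and the method ignores negation, sarcasm, and context.

```python
import re

# Tiny hand-made lexicon; a real system would learn word sentiment from data.
POSITIVE = {"amazing", "great", "good", "delicious"}
NEGATIVE = {"bad", "awful", "terrible", "cold"}

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def sentiment(text):
    """Count positive and negative words and compare the totals."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

result = sentiment("This meal was amazing!")
```

For the example sentence used earlier, "This meal was amazing!", the function returns "positive". A sentence like "This meal was not amazing" would fool it, which is one reason real systems rely on models that take context into account.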

The Future of NLP

NLP is rapidly advancing with AI, breaking down language barriers and facilitating more natural human-machine interactions. Despite challenges such as understanding contextual differences and accounting for the uniqueness of languages, NLP continues to grow in strength, particularly with technologies like deep learning and large language models (LLMs). These technologies enhance the machine’s ability to process and understand human language, making NLP a critical component of modern AI.

Large Language Models (LLM)

Large language models (LLMs) are massive artificial intelligence models trained to perform natural language processing (NLP) tasks. LLMs are capable of generating meaningful text in response to human inputs by being trained on vast amounts of text data. Since their core focus is "language," LLMs specialize in understanding, analyzing, and generating written language. These models typically contain millions or even billions of parameters, enabling them to achieve high performance in understanding and producing language.

Key characteristics and working principles of large language models:

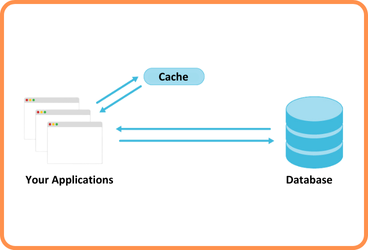

- Training Process: LLMs are trained on large text datasets (books, articles, web pages, etc.). During training, the model repeatedly tries to predict the next word (more precisely, the next token) from the text that comes before it. This process allows the model to learn the patterns and relationships within the language.

- Transformers and Attention Mechanism: Most LLMs, especially GPT (Generative Pre-trained Transformer) models, are developed using the transformer architecture. Transformers work with an "attention" mechanism that allows the model to focus on important parts of the data. This mechanism helps the model learn the relationships between words in a sentence, enabling better understanding.

- Massive Number of Parameters: Parameters in these models are weights and coefficients that allow the model to learn the patterns and structures of the language. Large models can have billions of parameters, enabling them to capture the complexities and nuances of language.

- Fine-tuning: LLMs are often pre-trained on a broad dataset and later fine-tuned for a specific task. During this process, the model’s performance is optimized for a more specific use case. For example, a language model that has learned general language knowledge can be fine-tuned on legal texts to provide better results in the legal field.

- Versatility: Large language models can perform a variety of language tasks. These include text generation, text completion, translation, summarization, and question answering.
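The next-word prediction objective from the training process can be illustrated with a very small stand-in: a bigram model that counts which word follows which. This is not how an LLM is implemented (LLMs use neural networks with billions of parameters and look at far more than one previous word), and the corpus below is invented, but the goal of "predict what comes next" is the same.

```python
from collections import Counter, defaultdict

# Invented toy corpus; real models train on billions of words.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."

# "Training": count how often each word follows each other word.
follow_counts = defaultdict(Counter)
words = corpus.split()
for current, nxt in zip(words, words[1:]):
    follow_counts[current][nxt] += 1

def predict_next(word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    followers = follow_counts.get(word)
    return followers.most_common(1)[0][0] if followers else None

next_after_the = predict_next("the")   # "cat" follows "the" most often here
next_after_sat = predict_next("sat")
```

Generating text then amounts to predicting a next word, appending it, and repeating, which is also the basic loop behind LLM text generation.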

Common Examples of Large Language Models

- GPT-3: A large language model developed by OpenAI, with 175 billion parameters.

- GPT-4: A large language model developed by OpenAI. OpenAI has not disclosed its parameter count; the frequently cited figure of about 1.7 trillion parameters is an unofficial estimate.

- BERT: Developed by Google, widely used in natural language processing tasks.

- T5 (Text-to-Text Transfer Transformer): A versatile language model from Google.

Let's briefly explain some of these models and their technologies.

Generative Pre-trained Transformer (GPT)

GPT, the model family behind GPT-3.5 and GPT-4, stands for "Generative Pre-trained Transformer." OpenAI introduced the first GPT model in 2018, but the ideas behind it were in development long before that. GPT brings together three ideas: generative AI, pre-trained models, and finally transformers, which Google introduced in 2017. Each of these is complex enough to warrant deep exploration. Let's examine each letter of the acronym.

- G – Generative AI: Generative AI refers to AI models that can create new content, such as text and images. These models learn the structures and patterns of the data provided to them, enabling the creation of new data with similar characteristics. Given a prompt, the model can produce new outputs, such as stories or poems.

- P – Pre-trained: A "pre-trained" model refers to a model or neural network that has been created and trained on a large dataset by someone else to solve a similar problem. Instead of building a model from scratch, AI teams can use a pre-trained model as a starting point. Notable examples of successful large-scale pre-trained language models include BERT (Bidirectional Encoder Representations from Transformers) developed by Google and the GPT series by OpenAI.

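- T – Transformer: Transformers are a deep learning architecture introduced by Google in 2017, and they are the final piece of GPT. In simple terms, a transformer breaks input text down into tokens (words or word pieces) and converts each token into a vector by looking it up in a learned embedding table. Attention layers then relate these vectors to one another so that each token is interpreted in the context of the others.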
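The table lookup and the attention mechanism can be sketched in plain Python. This is a heavily simplified illustration: the two-dimensional embedding table is hypothetical, and real transformers additionally apply learned projection matrices for queries, keys, and values, use multiple attention heads, and add positional information, all of which are omitted here.

```python
import math

# Hypothetical 2-dimensional embedding table; real models learn much larger ones.
embedding_table = {
    "the": [1.0, 0.0],
    "cat": [0.0, 1.0],
    "sat": [1.0, 1.0],
}

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention with queries = keys = values = the inputs."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(dimension).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        all_weights.append(weights)
        # New representation: attention-weighted average of all token vectors.
        outputs.append(
            [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]
        )
    return outputs, all_weights

tokens = "the cat sat".split()
vectors = [embedding_table[t] for t in tokens]   # table lookup: token -> vector
contextual, attention_weights = self_attention(vectors)
```

Each row of `attention_weights` shows how strongly one token "attends" to every token in the sentence, and each output vector is a blend of the input vectors according to those weights.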

BERT

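BERT, which stands for Bidirectional Encoder Representations from Transformers, was developed by Google on top of the transformer architecture. It is one of the most successful approaches in NLP: it is pre-trained on large amounts of unlabeled text and then fine-tuned for a specific task, which addresses the challenge of limited labeled training data. On language-understanding tasks such as text classification, BERT can rival GPT-style models and even outperform them.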

While large language models excel in handling complex language-related tasks, they may be limited in understanding real-world knowledge. Consequently, they can sometimes generate incorrect or illogical responses. Additionally, training and running these models require high computational power. Lastly, they may inherit biases from the data they were trained on, potentially leading to biased or inaccurate answers.

