The Power and Danger of Paradigms

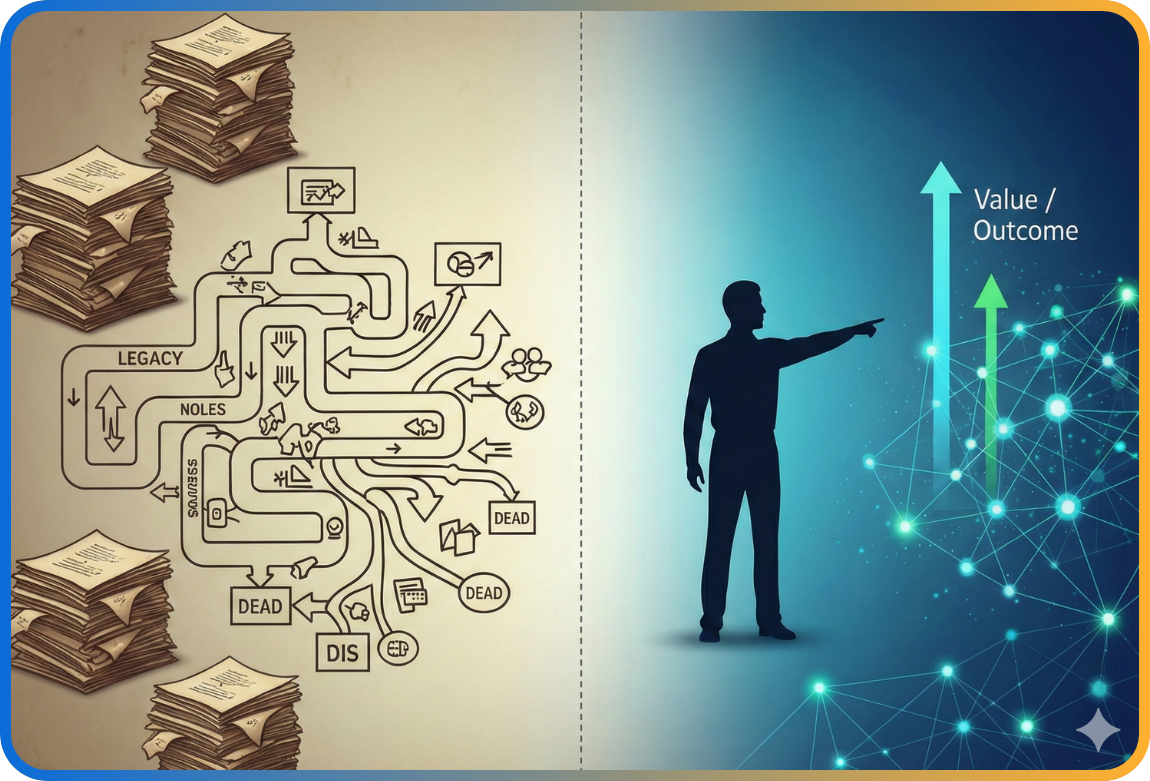

Human history is full of examples of entrenched mental models delaying scientific progress. One striking case is the ancient astronomers’ belief in the “perfect circle.” For centuries, planets were assumed to move in circular orbits; when observations did not fit, increasingly complex epicycle models were invented to explain the discrepancies (Kuhn, 1962). Johannes Kepler, however, demonstrated that planets move in elliptical orbits. This discovery revealed the danger of blindly adhering to paradigms and highlighted the importance of fresh perspectives. Software testing faces similar risks today, and in the age of artificial intelligence, paradigm traps become less visible yet more powerful.

The Traps of the Human Mind: Psychological Origins

Psychology and cognitive science show that the human mind often relies on shortcuts to make quick decisions. These heuristics can be advantageous in everyday life (Kahneman, 2011), but in complex domains like software testing they can harden into bias. The concept of cognitive economy explains how the brain conserves energy by automating thought patterns that worked in the past. This mechanism is efficient, but it risks crowding out novel solutions (Kahneman, 2011). Likewise, humans tend to avoid uncertainty, leaning on past experience even in ambiguous situations (Festinger, 1957). From an evolutionary perspective, rapid and intuitive decision-making aided survival. Yet in today’s complex software world, the same tendency fosters cognitive biases (Tversky & Kahneman, 1974).

Cognitive Biases in Software Testing

Test engineers are not immune to these mental traps. Two biases are particularly critical:

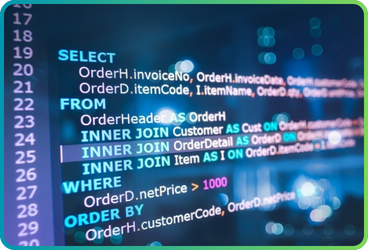

- **Confirmation Bias.** The tendency to seek evidence consistent with existing beliefs while ignoring contradictory information (Nickerson, 1998). For example, a tester validating a payment API’s performance might only test under standard traffic conditions, overlooking vulnerabilities that appear under heavy load (Runeson & Andrews, 2012).

- **Selective Bias.** The inclination to limit test coverage to easily observable or “safe” scenarios (Felderer & Beer, 2015). For instance, testing a mobile app only on the latest high-end devices misses critical issues that may occur on lower-end hardware.

When combined, these biases narrow and distort test coverage, causing rare but critical failures to slip through unnoticed (Tversky & Kahneman, 1974).

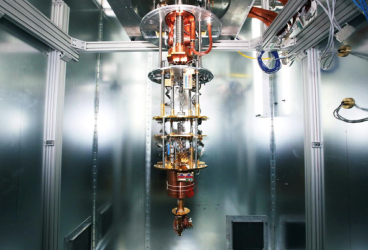

Scaled Paradigm Errors in the Age of AI and GenAI

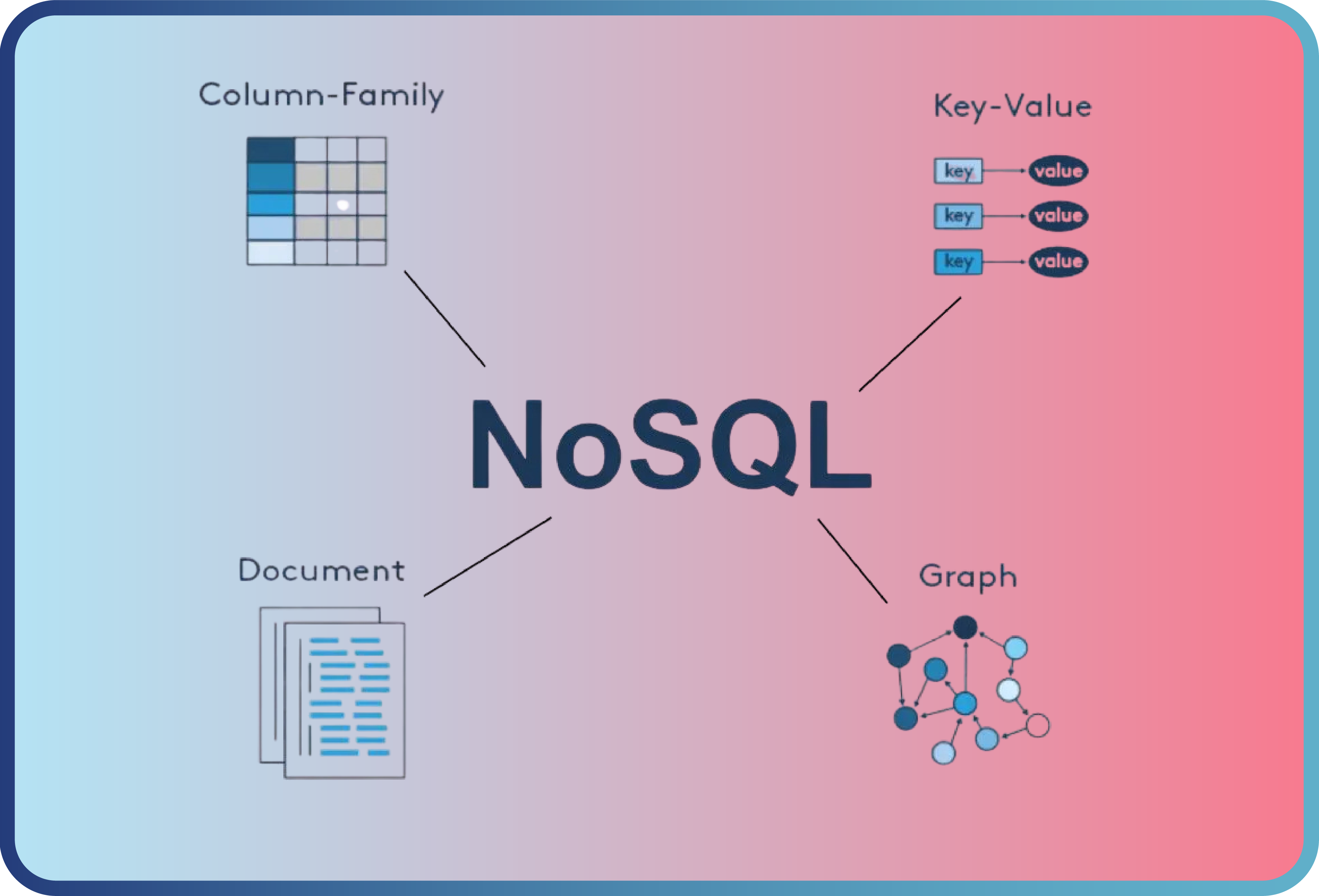

Before November 2022, AI discussions largely revolved around machine learning (ML) and deep learning (DL). During this era, biases mainly stemmed from imbalanced training data, underrepresentation of minority classes, and a lack of transparency in model decisions (Stolee & Robinson, 2020). Datasets influenced by confirmation or selective bias led to test scenarios that prioritized certain conditions while neglecting rare but significant ones (Felderer & Beer, 2015).

After November 2022, with the rise of large language models (LLMs) and multimodal generative AI (GenAI) systems, the debate shifted dramatically. Beyond data distribution issues, new risks emerged: hallucinations, reproduction of cultural biases, security vulnerabilities such as prompt injection and jailbreaking, and a lack of transparency (Bender et al., 2021; Weidinger et al., 2022). In this new era, biases became less visible but more impactful. For instance, GenAI-driven tools can generate hundreds of test cases at scale, yet these cases may amplify existing biases or propagate hallucinations from the model itself (Bommasani et al., 2023). Risks once confined to individual human errors thus become systematic and large-scale in software testing.

Bias and Test Techniques from an ISTQB Perspective

ISTQB’s test design techniques make the impact of bias on software testing more concrete. Bias is not merely theoretical but a practical threat that shrinks test coverage and hides critical defects. Key techniques include:

- **Boundary Value Analysis.** Defects tend to cluster at the edges of input ranges rather than in the middle. Confirmation or selective bias steers testers toward “average” cases, leaving boundary conditions unchecked (ISTQB, 2018). This increases the risk of missing severe defects.

- **Equivalence Partitioning.** Inputs are divided into logical partitions, and representative values from each class are tested. Cognitive biases may cause testers to overlook certain partitions altogether, leaving untested segments of the software (ISTQB, 2018).

- **Risk-Based Testing.** Risk prioritization can be skewed by bias. Visible risks may be exaggerated while less obvious but critical risks are ignored, which leaves coverage strategically unbalanced.
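One way to keep boundaries and partitions from being skipped is to enumerate them mechanically instead of choosing inputs by intuition. The following is a minimal sketch, assuming a hypothetical input field that accepts integer ages from 18 to 65 inclusive. The range, the function names, and the three-partition split are illustrative assumptions and are not taken from the ISTQB syllabus.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Two-value boundary analysis: each boundary plus its nearest invalid neighbour."""
    return [low - 1, low, high, high + 1]


def partition_representatives(low: int, high: int) -> dict[str, int]:
    """One representative value from each equivalence partition."""
    return {
        "below_range": low - 10,        # invalid partition under the range
        "in_range": (low + high) // 2,  # valid partition
        "above_range": high + 10,       # invalid partition over the range
    }


def is_valid_age(age: int, low: int = 18, high: int = 65) -> bool:
    """Hypothetical system under test."""
    return low <= age <= high


if __name__ == "__main__":
    for value in boundary_values(18, 65):
        print("boundary", value, is_valid_age(value))
    for name, value in partition_representatives(18, 65).items():
        print("partition", name, value, is_valid_age(value))
```

Because the inputs are derived from the specification rather than from the tester's expectations, the "average" case becomes just one value among several, and an omitted partition shows up as a missing key rather than as an invisible gap.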

Thus, from an ISTQB perspective, bias is not just an abstract issue but a measurable risk factor directly impacting test scope, defect detection rates, and software quality. Moreover, in the age of AI these risks do not merely persist; they scale. GenAI tools can replicate a single bias across thousands of test cases, turning individual blind spots into systematic risks.

Technical Risks and Current Debates Around Paradigm Traps

In traditional ML/DL systems, risks clustered around data imbalance, neglect of rare scenarios, and limited explainability (Felderer & Beer, 2015). In the GenAI era, these risks expanded: measuring hallucination rates, verifying outputs, resisting adversarial prompts, and addressing the reproduction of cultural/ethical biases (Weidinger et al., 2022).

Currently, no fully standardized methodology exists to measure or classify these risks. However, several building blocks are emerging: fairness metrics such as demographic parity, equal opportunity, and equalized odds; the IEEE P7003 standard for algorithmic bias considerations; and evaluation resources such as TruthfulQA (Lin et al., 2023), the hallucination survey by Ji et al. (2023), RealToxicityPrompts, and Stanford CRFM’s HELM (2022). While standardization is lacking, these efforts provide a rich foundation that can be adapted to software testing.

Notable metrics include:

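- **Demographic Parity** – Checking that positive outcomes occur at the same rate across groups.

- **Equal Opportunity** – Checking that true positive rates are equal across groups (equalized odds additionally requires equal false positive rates).

- **Calibration** – Assessing whether the model’s predicted probabilities match observed frequencies.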

- **Truthfulness / Hallucination Rate** – Measuring the frequency of false outputs in GenAI.

- **Robustness Under Adversarial Prompts** – Gauging resilience against manipulative or harmful inputs.
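To make the first two metrics concrete, here is a minimal sketch that computes a demographic parity gap and an equal opportunity gap on a toy dataset. Equal opportunity is taken here as equality of true positive rates. The group names, labels, predictions, and helper functions are all hypothetical and exist only for illustration; calibration, hallucination rate, and adversarial robustness are left out to keep the example short.

```python
from collections import defaultdict


def positive_rate_by_group(groups, predictions):
    """Share of positive predictions (1) per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, predicted in zip(groups, predictions):
        totals[group] += 1
        positives[group] += predicted
    return {group: positives[group] / totals[group] for group in totals}


def true_positive_rate_by_group(groups, labels, predictions):
    """Share of actual positives that were predicted positive, per group."""
    actual, hits = defaultdict(int), defaultdict(int)
    for group, label, predicted in zip(groups, labels, predictions):
        if label == 1:
            actual[group] += 1
            hits[group] += predicted
    return {group: hits[group] / actual[group] for group in actual}


def gap(rates):
    """Largest difference between any two groups; 0.0 means parity."""
    return max(rates.values()) - min(rates.values())


# Toy data (illustrative only): two groups of four records each.
GROUPS = ["a", "a", "a", "a", "b", "b", "b", "b"]
LABELS = [1, 1, 0, 0, 1, 1, 0, 0]
PREDICTIONS = [1, 1, 1, 0, 1, 0, 0, 0]

if __name__ == "__main__":
    parity = positive_rate_by_group(GROUPS, PREDICTIONS)
    opportunity = true_positive_rate_by_group(GROUPS, LABELS, PREDICTIONS)
    print("demographic parity gap:", gap(parity))        # 0.5
    print("equal opportunity gap:", gap(opportunity))    # 0.5
```

A gap of 0.0 would indicate parity on that metric. What threshold counts as acceptable is a project decision that this sketch does not attempt to answer.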

Inspired by the Scientific Method: The Popperian Approach

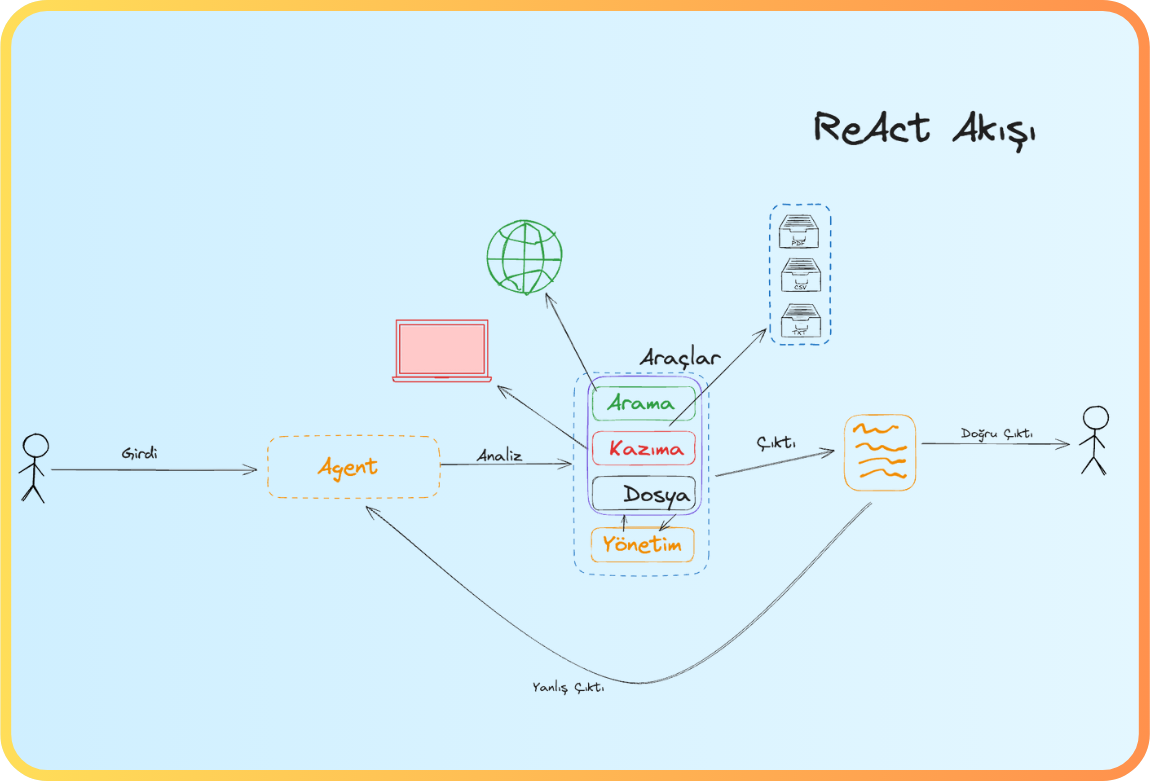

Karl Popper argued that science advances by falsification, not verification (Popper, 1959). For testers, this means designing scenarios not just to confirm assumptions but to challenge them — deliberately incorporating extreme and unexpected conditions. As Runeson & Andrews (2012) note, designing tests with falsification in mind reduces confirmation bias. Exploratory testing, as emphasized by Kaner, Bach & Pettichord (2002), embodies this principle in practice: testers go beyond scripted cases to actively search for unexpected behaviors.
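The difference between confirming and falsifying can be shown with a small sketch. The function `split_payment` below is a hypothetical system under test with a deliberate defect; the invariant ("the parts must add up to the total") and the search bounds are assumptions chosen for illustration. The confirmatory test passes, while a systematic search for a counterexample exposes the defect almost immediately.

```python
from itertools import product


def split_payment(total_cents: int, parts: int) -> list[int]:
    """Hypothetical function under test: naive even split (drops the remainder)."""
    return [total_cents // parts] * parts


def confirmatory_test() -> bool:
    """Checks only a case the author already expects to work."""
    return split_payment(100, 4) == [25, 25, 25, 25]


def find_counterexample(max_total: int = 20, max_parts: int = 5):
    """Falsification attempt: search small inputs for any violation of the
    invariant 'the parts must add up to the total'."""
    for total, parts in product(range(max_total + 1), range(1, max_parts + 1)):
        if sum(split_payment(total, parts)) != total:
            return total, parts
    return None


if __name__ == "__main__":
    print("confirmatory test passes:", confirmatory_test())  # True
    print("counterexample:", find_counterexample())          # (1, 2)
```

The exhaustive loop over a small input space stands in for the broader idea. In practice a property-based testing library or exploratory session would play the same role over a larger space.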

The same principle applies to AI-assisted testing. Test cases generated by AI should not be accepted at face value; they must be critically examined. The key question becomes: *“Which possibilities are being left out?”* In this way, AI evolves from a passive assistant into an active partner, augmenting the tester’s critical judgment.
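One hedged way to ask "which possibilities are being left out?" is to compare a batch of generated test cases against the dimensions the team expects to cover. The sketch below assumes that test cases arrive as dictionaries and that the expected dimensions (`device_tier`, `load`) and their values are known in advance; both are hypothetical and should be replaced by whatever the project's risk analysis identifies.

```python
# Hypothetical coverage model: each dimension and the values that should appear.
EXPECTED = {
    "device_tier": {"low", "mid", "high"},
    "load": {"normal", "peak"},
}


def uncovered_values(cases, expected=EXPECTED):
    """Return, per dimension, the expected values that no test case exercises."""
    gaps = {}
    for dimension, values in expected.items():
        seen = {case.get(dimension) for case in cases}
        missing = sorted(values - seen)
        if missing:
            gaps[dimension] = missing
    return gaps


# Illustrative batch, standing in for AI-generated test cases.
GENERATED_CASES = [
    {"device_tier": "high", "load": "normal"},
    {"device_tier": "high", "load": "normal"},
    {"device_tier": "mid", "load": "normal"},
]

if __name__ == "__main__":
    print(uncovered_values(GENERATED_CASES))
    # {'device_tier': ['low'], 'load': ['peak']}
```

A large batch can look thorough while exercising only the comfortable corner of the input space; a report like this makes the omission explicit. It only detects gaps in dimensions someone thought to list, so it complements critical review rather than replacing it.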

The Importance of Paradigmatic Inquiry and the Zero Trust Perspective

Questioning paradigms is never easy, as entrenched practices and cultural norms resist change. Yet for test engineers, this inquiry is no longer optional but essential. Continuously asking *“Why are we testing this way?”*, exploring beyond common scenarios, and critically evaluating AI-generated suggestions are not just technical skills but vital soft skills in the AI era. Critical inquiry prevents both human and AI-driven biases from becoming systemic, serving as a scientific safeguard for software quality.

The **Zero Trust** approach, a principle borrowed from network security, rejects unconditional trust in outputs. In software testing, this means that every scenario generated by AI or GenAI must undergo independent verification before it is relied on. Combining Popperian inquiry with Zero Trust principles makes AI-driven testing more reliable and sustainable (NIST, 2023).
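Independent verification can be sketched as a gate that admits a generated test case only if its claimed expected result agrees with a trusted oracle. In this minimal example the oracle is Python's standard `calendar.isleap`; the generated cases, including the deliberately wrong one for 1900, are invented for illustration, and the function name `verify_generated_cases` is an assumption.

```python
import calendar


def verify_generated_cases(cases, oracle=calendar.isleap):
    """Split (input, claimed_result) pairs into accepted and rejected lists
    by checking each claim against an independent oracle."""
    accepted, rejected = [], []
    for year, claimed in cases:
        if oracle(year) == claimed:
            accepted.append((year, claimed))
        else:
            rejected.append((year, claimed))
    return accepted, rejected


# Illustrative stand-in for model output; the 1900 entry carries a wrong expectation.
GENERATED = [(2000, True), (1900, True), (2024, True), (2023, False)]

if __name__ == "__main__":
    accepted, rejected = verify_generated_cases(GENERATED)
    print("accepted:", accepted)  # [(2000, True), (2024, True), (2023, False)]
    print("rejected:", rejected)  # [(1900, True)]
```

Real systems rarely have an oracle this convenient. A reference implementation, a reviewed specification, or human sign-off can fill the same role, and the point of the gate is simply that nothing enters the suite on the generator's word alone.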

Conclusion: Lessons from Kepler to the Age of AI

Kepler shattered a centuries-old paradigm by questioning assumptions and showing that planets move in ellipses, not circles. Software testing must take the same lesson to heart: when assumptions go unchallenged, both human and AI-driven test processes will perpetuate hidden errors. Especially today, as AI tools amplify biases at scale, paradigmatic inquiry is more than a soft skill: it becomes the scientific safeguard of software quality.

References

- Barocas, S., Hardt, M., & Narayanan, A. (2021). *Fairness and machine learning*. http://fairmlbook.org/

- Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? *FAccT 2021*. https://doi.org/10.1145/3442188.3445922

- Bommasani, R., Hudson, D. A., & Liang, P. (2023). Foundation models in the era of generative AI. Stanford HAI. https://hai.stanford.edu/

- Felderer, M., & Beer, A. (2015). Addressing cognitive bias in software quality assurance. *ICSTW 2015*. https://doi.org/10.1109/ICSTW.2015.7107469

- Festinger, L. (1957). *A theory of cognitive dissonance*. Stanford University Press.

- ISTQB. (2018). *Certified tester foundation level syllabus, version 2018*. https://www.istqb.org/

- Ji, Z., Lee, N., Frieske, R., Yu, T., Su, D., Xu, Y., Ishii, E., Bang, Y. J., Madotto, A., & Fung, P. (2023). Survey of hallucination in natural language generation. *ACM Computing Surveys, 55*(12), 1–38. https://doi.org/10.1145/3571730

- Kahneman, D. (2011). *Thinking, fast and slow*. Farrar, Straus and Giroux.

- Kaner, C., Bach, J., & Pettichord, B. (2002). *Lessons learned in software testing*. Addison-Wesley.

- Kuhn, T. S. (1962). *The structure of scientific revolutions*. University of Chicago Press.

- Lin, S., Hilton, J., & Evans, O. (2023). TruthfulQA: Measuring how models mimic human falsehoods. *TACL, 11*, 1–20. https://doi.org/10.1162/tacl_a_00407

- Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. *Review of General Psychology, 2*(2), 175–220. https://doi.org/10.1037/1089-2680.2.2.175

- NIST. (2023). *Zero trust architecture and generative AI*. National Institute of Standards and Technology. https://www.nist.gov/

- Popper, K. (1959). *The logic of scientific discovery*. Routledge.

- Runeson, P., & Andrews, A. (2012). Cognitive biases in software testing. *IEEE Software, 29*(5), 18–21. https://doi.org/10.1109/MS.2012.107

- Stanford CRFM. (2022). *HELM: Holistic evaluation of language models*. https://crfm.stanford.edu/helm

- Stolee, K. T., & Robinson, B. J. (2020). Bias in machine learning models and its impact on software testing. *Journal of Systems and Software, 170*, 110717. https://doi.org/10.1016/j.jss.2020.110717

- Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. *Science, 185*(4157), 1124–1131. https://doi.org/10.1126/science.185.4157.1124

- Weidinger, L., et al. (2022). Taxonomy of risks posed by language models. *FAccT 2022*, 214–229. https://doi.org/10.1145/3531146.3533088

- IEEE. (2022). *IEEE P7003: Standard for algorithmic bias considerations*. IEEE Standards Association.
