Artificial intelligence is transforming not only how software products are designed but also how they are tested. Traditional testing methods are struggling to keep up as expectations around speed, coverage, and accuracy continue to rise, and in this landscape AI stands as a powerful ally for testers. At the same time, evaluating the reliability, accuracy, and ethical alignment of AI-driven software has become a key responsibility for testers, because AI-powered products are systems that learn, adapt, and evolve over time.

In this article, we will focus on two main topics. First, we will explore how artificial intelligence contributes to the testing process. Then, we will examine how such systems should be tested.

1) How Does Artificial Intelligence Support Software Testing?

Artificial intelligence (AI) technologies offer promising opportunities to make software testing more efficient and to broaden its scope. The ISTQB Certified Tester AI Testing (CT-AI) syllabus emphasizes how AI can specifically support automated testing and defect prediction. The main areas where AI contributes to software testing are listed below:

Automated Generation of Test Scenarios and Test Data

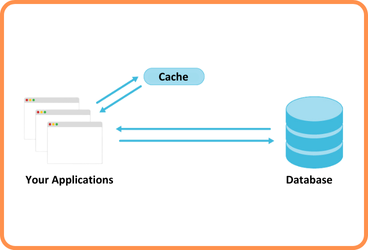

AI can analyze historical usage data and log files to derive new test scenarios and test data. For example, a machine learning model that processes web server logs can learn from real user behavior, identify the edge cases that users actually run into, and generate corresponding test cases. AI-based tools can also extract test scenarios from system flow diagrams or usage records, saving testers significant time. As a result, test coverage expands, and unexpected scenarios that were never designed manually can also be validated.
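As a rough illustration of mining logs for scenarios, the sketch below assumes a simplified access log with one `session_id path` pair per line; the log format, the `mine_scenarios` helper and the `min_count` threshold are all hypothetical. It counts page-to-page transitions across sessions, turns frequent ones into regression scenarios and reports rare ones as edge-case candidates. It uses plain counting rather than a trained model, so treat it as a starting point, not as how any particular tool works.

```python
from collections import Counter, defaultdict

# Hypothetical simplified log: "<session_id> <path>" per line, in time order.
LOG = """\
s1 /home
s1 /search
s1 /product/42
s1 /cart
s2 /home
s2 /search
s2 /product/7
s2 /cart
s3 /home
s3 /cart
s3 /checkout
"""

def sessions_from_log(text):
    """Group requested paths by session, preserving request order."""
    sessions = defaultdict(list)
    for line in text.splitlines():
        if not line.strip():
            continue
        session_id, path = line.split()
        # Normalize IDs so /product/42 and /product/7 count as the same step.
        step = "/product/{id}" if path.startswith("/product/") else path
        sessions[session_id].append(step)
    return sessions

def mine_scenarios(text, min_count=2):
    """Split observed transitions into frequent (regression) and rare (edge-case) sets."""
    transitions = Counter()
    for steps in sessions_from_log(text).values():
        transitions.update(zip(steps, steps[1:]))
    frequent = sorted(t for t, c in transitions.items() if c >= min_count)
    rare = sorted(t for t, c in transitions.items() if c < min_count)
    return frequent, rare

frequent, rare = mine_scenarios(LOG)
for src, dst in frequent:
    print(f"regression scenario: navigate {src} -> {dst}")
for src, dst in rare:
    print(f"edge-case candidate: navigate {src} -> {dst}")
```

In this toy log, the `/home -> /cart -> /checkout` shortcut appears only once, so it surfaces as an edge case that a manually designed suite might have missed.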

Image Recognition and Natural Language Processing in Test Automation

Traditional automated testing tools typically identify UI components by IDs or coordinates. AI, however, can recognize interface elements visually, much as a human eye perceives them. For instance, an AI-powered testing tool can classify buttons or icons at the pixel level and click them accordingly. This approach makes tests less fragile when the UI changes: instead of relying on static location references, AI-based tools that use object recognition provide more stable tests for dynamic interfaces. Similarly, in chatbot or voice assistant testing, AI can use natural language processing to understand user inputs and generate automated responses.
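To show why locating an element by appearance survives a layout change that would break a hard-coded coordinate, here is a deliberately simple sketch. It is not a learned object detector: it uses plain template matching (minimum sum of squared differences) over a tiny grayscale "screenshot" stored as nested lists. The screen data and the `find_element` and `render` helpers are hypothetical.

```python
def find_element(screen, template):
    """Return the (row, col) where the template best matches the screen (minimal SSD)."""
    th, tw = len(template), len(template[0])
    best_pos, best_score = None, float("inf")
    for r in range(len(screen) - th + 1):
        for c in range(len(screen[0]) - tw + 1):
            score = sum(
                (screen[r + i][c + j] - template[i][j]) ** 2
                for i in range(th)
                for j in range(tw)
            )
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos

# A 2x3 "button" glyph (bright border, dark centre pixel) on a 0-valued background.
BUTTON = [[9, 9, 9],
          [9, 1, 9]]

def render(button_row, button_col, height=6, width=8):
    """Build a hypothetical screenshot with the button drawn at the given position."""
    screen = [[0] * width for _ in range(height)]
    for i, row in enumerate(BUTTON):
        for j, value in enumerate(row):
            screen[button_row + i][button_col + j] = value
    return screen

old_ui = render(1, 1)
new_ui = render(3, 4)  # a redesign moved the button
print(find_element(old_ui, BUTTON))  # (1, 1)
print(find_element(new_ui, BUTTON))  # (3, 4)
```

A test that clicked at the old coordinates would miss after the redesign, while the visual lookup still finds the button. Production tools replace the exact-match template with trained models that tolerate changes in color, size and rendering.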

Defect Categorization and Prioritization

AI can be used to categorize previously reported defects and predict the category of newly discovered ones. A machine learning model can analyze keywords in a defect report and historical data to determine which class the defect most closely resembles. In projects with large volumes of defect records, AI can automatically route new defects to the appropriate development team. This enables prioritization of critical issues among thousands of defects and accelerates resolution for similar problems.
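A minimal sketch of keyword-based defect routing, assuming a small labelled history of defect reports (the reports, team names and helper functions are hypothetical). It uses a multinomial naive Bayes classifier with Laplace smoothing, which is one common baseline for text classification. It is not necessarily what any given defect-management tool uses.

```python
import math
from collections import Counter, defaultdict

# Hypothetical historical defect reports labelled with the team that fixed them.
HISTORY = [
    ("login page crashes after password reset", "auth"),
    ("token expired error on login", "auth"),
    ("session cookie not cleared on logout", "auth"),
    ("invoice total rounding wrong", "billing"),
    ("tax amount missing on invoice pdf", "billing"),
    ("payment declined but order created", "billing"),
]

def tokenize(text):
    return text.lower().split()

def train(history):
    """Collect per-class word counts and class frequencies (multinomial naive Bayes)."""
    word_counts = defaultdict(Counter)
    class_counts = Counter()
    vocab = set()
    for text, label in history:
        tokens = tokenize(text)
        word_counts[label].update(tokens)
        class_counts[label] += 1
        vocab.update(tokens)
    return word_counts, class_counts, vocab

def predict(report, model):
    """Return the most likely team for a new report, using Laplace (add-one) smoothing."""
    word_counts, class_counts, vocab = model
    total_reports = sum(class_counts.values())
    best_label, best_logp = None, -math.inf
    for label in class_counts:
        logp = math.log(class_counts[label] / total_reports)
        denom = sum(word_counts[label].values()) + len(vocab)
        for token in tokenize(report):
            logp += math.log((word_counts[label][token] + 1) / denom)
        if logp > best_logp:
            best_label, best_logp = label, logp
    return best_label

model = train(HISTORY)
print(predict("users see error on login after reset", model))  # auth
print(predict("wrong total on invoice", model))                # billing
```

Log-probabilities are summed instead of multiplying raw probabilities to avoid numeric underflow on longer reports.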

Regression Test Optimization

Machine learning can analyze previous test execution results and code changes to predict which tests are likely to be affected by recent updates. For example, an evolutionary algorithm or statistical model can examine past failures across repeated runs of a test suite and identify redundant tests. An AI-based tool can analyze historical test data and recommend a high-priority subset of test scenarios for regression testing. This allows testers to focus on the critical tests that exercise modified modules, saving significant time. In short, AI can shrink the regression test set by eliminating unnecessary tests based on knowledge gained from previous test runs.
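The selection idea can be sketched with a simple heuristic instead of a trained model. The example assumes hypothetical traceability data (which modules each test exercises) and historical failure rates. It skips tests that touch no changed module, then ranks the rest by how many changed modules they cover, with failure rate as a tie-breaker.

```python
# Hypothetical traceability data: which modules each test exercises,
# and how often each test failed over its recent executions.
COVERAGE = {
    "test_login": {"auth"},
    "test_checkout": {"cart", "payment"},
    "test_invoice_pdf": {"billing"},
    "test_search": {"search"},
    "test_refund": {"payment", "billing"},
}
FAILURE_RATE = {
    "test_login": 0.02,
    "test_checkout": 0.10,
    "test_invoice_pdf": 0.01,
    "test_search": 0.00,
    "test_refund": 0.25,
}

def prioritize(changed_modules, budget):
    """Pick up to `budget` tests that touch changed modules, riskiest first.

    Tests touching none of the changed modules are skipped entirely;
    the rest are ranked by how many changed modules they cover,
    with historical failure rate as a tie-breaker.
    """
    candidates = []
    for test, modules in COVERAGE.items():
        overlap = len(modules & changed_modules)
        if overlap:
            candidates.append((overlap, FAILURE_RATE[test], test))
    candidates.sort(reverse=True)
    return [test for _, _, test in candidates[:budget]]

print(prioritize({"payment", "billing"}, budget=2))  # ['test_refund', 'test_checkout']
```

An ML-based tool would typically learn the ranking from past change and failure data rather than use a fixed rule, but the output has the same shape: a small, ordered subset of the regression suite.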

Defect Prediction and Preventive Quality Analysis

AI can learn from source code metrics and version history to identify components with a high likelihood of defects. For instance, a machine learning model can use data such as code complexity, development history, and developer interactions to predict the defect density of a specific module. AI applications can also analyze defect patterns from similar projects and highlight risky areas in new projects. This enables test teams to focus limited resources on high-risk components and develop proactive testing strategies. If, based on data from previous versions, a module is flagged as having an 80% likelihood of containing defects, additional static analysis and in-depth testing can be planned for that module.
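A minimal sketch of such a defect-prediction model, assuming three hypothetical per-module metrics (cyclomatic complexity, commits in the last release, distinct authors) and a tiny hand-made training set. It fits a logistic regression with plain batch gradient descent and outputs a probability of the kind quoted above. Real projects would use far more data, proper feature scaling and a validated library implementation.

```python
import math

# Hypothetical training data per module from earlier releases:
# (cyclomatic complexity, commits in last release, distinct authors) -> had defect (1/0)
TRAINING = [
    ((35, 40, 6), 1),
    ((28, 25, 5), 1),
    ((30, 33, 4), 1),
    ((8, 3, 1), 0),
    ((12, 6, 2), 0),
    ((5, 2, 1), 0),
]

def scale(x):
    # Crude fixed scaling (an assumption) so the features have comparable magnitudes.
    return [x[0] / 40, x[1] / 40, x[2] / 6]

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def train(data, epochs=2000, lr=0.5):
    """Fit logistic-regression weights with plain batch gradient descent."""
    w = [0.0, 0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0, 0.0, 0.0]
        grad_b = 0.0
        for x, y in data:
            xs = scale(x)
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, xs)) + b) - y
            for i in range(3):
                grad_w[i] += err * xs[i]
            grad_b += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b

def defect_probability(metrics, w, b):
    xs = scale(metrics)
    return sigmoid(sum(wi * xi for wi, xi in zip(w, xs)) + b)

w, b = train(TRAINING)
for name, metrics in {"payment_core": (32, 30, 5), "utils_format": (6, 2, 1)}.items():
    print(f"{name}: {defect_probability(metrics, w, b):.0%} estimated defect likelihood")
```

The printed percentages play the role of the "80% likelihood" flag: modules above an agreed threshold would be scheduled for extra static analysis and deeper testing.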

Considering the contributions listed above, AI is expected to significantly accelerate testing activities and improve software quality. A team using AI-powered defect prediction tools can anticipate which component is most at risk in the next release and write more tests for it. Similarly, AI tools that perform visual comparisons can detect unexpected changes in the application interface at the pixel level, revealing defects that might escape human observation. In short, when applied effectively, AI serves as a “smart assistant” that reduces the burden on testers.

2) How Should AI-Powered Software Be Tested?

Testing AI-powered systems presents challenges that differ significantly from testing traditional software. These systems are typically adaptive, learning-based, and often unpredictable, which introduces specific considerations into the testing approach. The ISTQB Certified Tester AI Testing (CT-AI) syllabus thoroughly addresses the quality attributes and challenges associated with testing AI-based systems. In my own experience, using AI itself is often unavoidable when testing such systems. Below are the key aspects that must be considered when testing AI-enabled software:

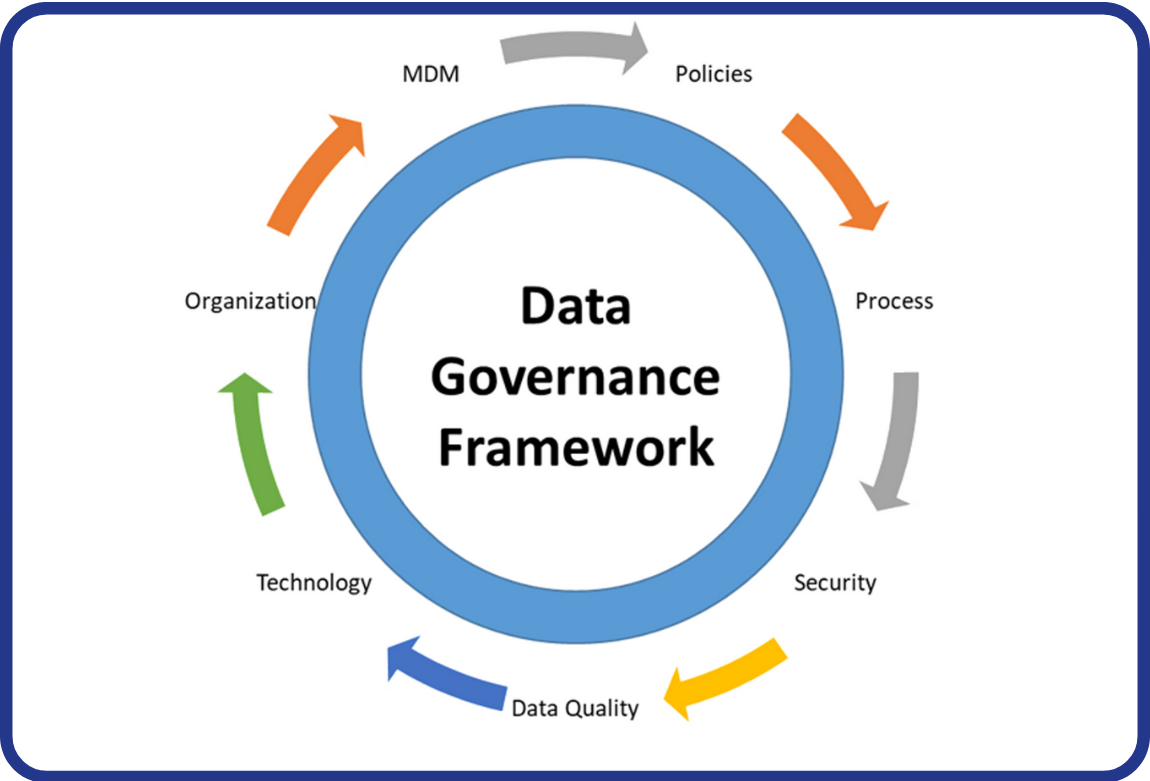

Data Quality and Bias

The success of AI models depends heavily on the quality of the training data. If the training or test datasets contain errors, inconsistencies, or human-induced biases, the model’s outputs are likely to be flawed and biased as well. Therefore, testers must begin by examining the datasets used. Sampling bias and algorithmic bias are among the most critical sources of bias. For instance, imagine an AI-powered credit approval system trained only on data from high-income individuals. This could lead to unfair rejections of creditworthy applicants from lower-income groups, which is an example of inappropriate bias. During testing, scenarios representing diverse demographic groups should be executed to verify whether the model makes consistent decisions. Statistical analysis can help detect unwanted biases in training data, such as the underrepresentation of certain customer segments. Experimental techniques can also be applied: for example, slightly modifying an input (e.g., changing only the gender field) and observing whether the output changes disproportionately. Ultimately, verifying that AI systems operate fairly and impartially is a critical part of the testing process.
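The statistical check for underrepresentation can be sketched as follows, using the credit example. The training records, the real-world applicant shares and the 0.5 flagging ratio are all hypothetical. The function compares each income bracket's share of the training data with its expected share and reports per-group approval rates alongside.

```python
from collections import Counter

# Hypothetical training records for a credit model: (income_bracket, approved)
TRAINING = (
    [("high", 1)] * 700 + [("high", 0)] * 100
    + [("middle", 1)] * 120 + [("middle", 0)] * 60
    + [("low", 1)] * 5 + [("low", 0)] * 15
)
# Hypothetical share of each bracket among real applicants.
APPLICANT_SHARE = {"high": 0.30, "middle": 0.45, "low": 0.25}

def representation_report(records, expected_share, min_ratio=0.5):
    """Compare each group's share of the training data with its real-world share.

    A group is flagged when its training share is below `min_ratio` times its
    expected share (the 0.5 default is an assumption, not a standard).
    """
    counts = Counter(group for group, _ in records)
    approvals = Counter(group for group, label in records if label == 1)
    total = len(records)
    report = {}
    for group, expected in expected_share.items():
        share = counts[group] / total
        report[group] = {
            "train_share": round(share, 3),
            "expected_share": expected,
            "approval_rate": round(approvals[group] / counts[group], 3) if counts[group] else None,
            "underrepresented": share < min_ratio * expected,
        }
    return report

for group, row in representation_report(TRAINING, APPLICANT_SHARE).items():
    print(group, row)
```

Here the low-income bracket makes up only 2% of the training data against an expected 25%, which is exactly the kind of sampling gap that should be raised before the model's decisions are trusted.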

Uncertainty of Expected Outcomes

In traditional software, the expected output for a given input is typically well defined. In AI-based systems, however, defining a precise expected result can be difficult. For example, when submitting a photo to an image recognition system, we might expect the result to be “cat.” The model, however, may return: 92% cat, 5% fox, 3% raccoon. Such cases introduce the “oracle problem” for testers. One solution is to use tolerance-based comparisons, e.g., accepting the result if the model predicts “cat” with over 90% confidence. Techniques such as input perturbation and output consistency checks, which underlie metamorphic testing, can also help mitigate the oracle problem. In this approach, the input is intentionally modified, and the output is checked against the change that the modification should (or should not) cause. Instead of a single fixed “expected result,” AI systems should be tested against a range of plausible behaviors, and test oracles must be defined to accommodate this flexibility.
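Both oracle styles can be sketched in a few lines. The tolerance oracle reproduces the "cat with over 90% confidence" rule. The metamorphic check assumes a relation chosen for illustration, namely that mirroring a photo horizontally should not change the top label. `toy_classify` is a hypothetical stand-in for a real model so that the example runs on its own.

```python
def tolerance_oracle(probabilities, expected_label, threshold=0.90):
    """Pass if the top prediction is the expected label with confidence over the threshold."""
    top_label = max(probabilities, key=probabilities.get)
    return top_label == expected_label and probabilities[top_label] > threshold

# The output from the example above: 92% cat, 5% fox, 3% raccoon.
print(tolerance_oracle({"cat": 0.92, "fox": 0.05, "raccoon": 0.03}, "cat"))  # True
print(tolerance_oracle({"cat": 0.61, "fox": 0.30, "raccoon": 0.09}, "cat"))  # False

def mirror(image):
    """Flip a 2D grayscale image horizontally."""
    return [list(reversed(row)) for row in image]

def metamorphic_mirror_check(classify, image):
    """Metamorphic relation (assumed): mirroring a photo must not change the top label."""
    before, after = classify(image), classify(mirror(image))
    return max(before, key=before.get) == max(after, key=after.get)

def toy_classify(image):
    """Hypothetical stand-in model: 'cat' score = share of bright pixels."""
    pixels = [p for row in image for p in row]
    bright = sum(p > 127 for p in pixels) / len(pixels)
    return {"cat": bright, "fox": 1 - bright}

photo = [[200, 210, 40], [190, 220, 30]]
print(metamorphic_mirror_check(toy_classify, photo))  # True
```

The metamorphic check needs no expected label at all: it only compares the model with itself under a transformation, which is why it helps when no precise oracle exists.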

Explainability and Interpretability

In conventional systems, developers can understand how the code works and explain the root cause of a defect. In contrast, complex AI models such as deep neural networks often function as “black boxes”: their internal decision-making consists of matrix multiplications and activation values that are difficult to interpret. Testing the transparency, interpretability, and explainability of AI systems is therefore especially important, because evaluating how well a model can explain its decisions builds trust. For example, tools like LIME can be used to understand why a credit scoring model assigned a low score to a customer. LIME reveals which input features contributed to an individual prediction and to what extent. A tester might observe that the model attributed roughly 70% of the decision to “income level,” 20% to “age,” and 10% to “region,” and then assess whether this distribution is reasonable. If the model assigns excessive weight to irrelevant features, this indicates a quality issue. Explainability testing should also be conducted from the user’s perspective: is the AI system’s decision communicated in a way that the end user can understand? For instance, if a medical diagnosis system states “87% probability of condition X,” is that sufficiently clear for a physician? Such evaluations can be conducted through surveys or expert interviews. Ultimately, testers should investigate why the model made a specific decision and provide feedback to developers to improve the model or its explanations.
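The idea of per-feature contribution shares can be illustrated without LIME. LIME fits a local surrogate model on many perturbed samples, while the sketch below uses a simpler occlusion-style attribution. Each feature is replaced in turn by a baseline value, and the change in the model's output is recorded. The `credit_score` model and the `BASELINE` profile are hypothetical.

```python
# Hypothetical credit-scoring model standing in for a black box.
def credit_score(applicant):
    score = 300
    score += min(applicant["income"], 100_000) / 100_000 * 350
    score += min(applicant["age"], 60) / 60 * 100
    score += {"north": 50, "south": 0}[applicant["region"]]
    return score

# Hypothetical "neutral" reference applicant used for occlusion.
BASELINE = {"income": 50_000, "age": 40, "region": "south"}

def occlusion_attribution(model, instance, baseline):
    """Estimate each feature's share of influence on one prediction.

    Each feature is replaced in turn by its baseline value; the absolute change
    in the model output is that feature's effect. Effects are then normalized
    to shares that sum to 1.
    """
    original = model(instance)
    effects = {}
    for feature in instance:
        perturbed = dict(instance, **{feature: baseline[feature]})
        effects[feature] = abs(original - model(perturbed))
    total = sum(effects.values()) or 1.0
    return {feature: effect / total for feature, effect in effects.items()}

applicant = {"income": 20_000, "age": 25, "region": "north"}
for feature, share in occlusion_attribution(credit_score, applicant, BASELINE).items():
    print(f"{feature}: {share:.0%}")
```

For this applicant, `region` receives a larger share than `age`. A tester would question that result, because region should arguably be irrelevant to creditworthiness. This is the kind of finding that LIME-style explanations are meant to surface.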

Testing Autonomous Behaviors

Autonomous systems, such as self-driving cars or unmanned aerial vehicles, make decisions based on complex inputs from the external environment. A critical aspect of testing these systems is verifying when and how control should be handed over to a human operator. For example, a Level 3 autonomous vehicle should hand control back to the driver if lane markings disappear or sensors malfunction. Test engineers must validate this “handover to human” behavior by designing a variety of scenarios. In dense fog, when sensor reliability drops, does the system issue a warning and request driver control? Conversely, does it request a handover unnecessarily in trivial situations? These cases should be examined using boundary value analysis and decision table testing. If the boundary between autonomous operation and required human intervention lies at 60 km/h, tests should concentrate on values on and immediately around it, such as 59, 60, and 61 km/h. The use of simulators is highly recommended in autonomous system testing, as replicating dangerous or rare real-world conditions may not be feasible. In summary, autonomous AI systems must have clearly defined criteria for when to declare “this situation exceeds my capabilities” and request assistance, and these criteria must be validated through testing.
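A sketch of how such handover tests might be tabulated, under clearly hypothetical assumptions. The system under test is reduced to a `requires_handover` function that allows autonomous driving up to 60 km/h and requires a sensor confidence of at least 0.7. Both thresholds and the function itself are invented for illustration. The boundary cases probe 59, 60 and 61 km/h, and the decision-table style cases vary the other conditions.

```python
SPEED_LIMIT_KMH = 60          # hypothetical upper bound for autonomous operation
MIN_SENSOR_CONFIDENCE = 0.7   # hypothetical reliability threshold (e.g. dense fog)

def requires_handover(speed_kmh, lane_markings_visible, sensor_confidence):
    """Hypothetical system under test: should control go back to the driver?"""
    return (
        speed_kmh > SPEED_LIMIT_KMH
        or not lane_markings_visible
        or sensor_confidence < MIN_SENSOR_CONFIDENCE
    )

# Boundary value analysis around the speed threshold (other conditions nominal).
# Each case: (speed, lanes visible, sensor confidence, expected handover)
BOUNDARY_CASES = [
    (59, True, 0.95, False),
    (60, True, 0.95, False),
    (61, True, 0.95, True),
]
# Decision-table style cases for the other conditions, incl. the confidence boundary.
DECISION_CASES = [
    (50, False, 0.95, True),   # lane markings disappear
    (50, True, 0.69, True),    # sensor reliability drops, e.g. dense fog
    (50, True, 0.70, False),   # exactly at the confidence threshold
    (50, True, 0.95, False),   # trivial situation: no handover expected
]

def run_cases(cases):
    """Return every case whose actual result differs from the expected one."""
    failures = []
    for speed, lanes, confidence, expected in cases:
        actual = requires_handover(speed, lanes, confidence)
        if actual != expected:
            failures.append((speed, lanes, confidence, expected, actual))
    return failures

print("failures:", run_cases(BOUNDARY_CASES + DECISION_CASES))  # failures: []
```

In a real project, the same case tables would drive a simulator rather than a Python function. The value lies in writing the boundaries down explicitly so that an off-by-one in the handover logic (for example `>=` instead of `>`) fails a test.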

Automation Bias

Another important aspect of testing AI systems that interact with humans is evaluating whether users place excessive trust in the system. Automation bias occurs when users accept intelligent system recommendations without question or fail to intervene even when the system makes an error. For example, if a clinical decision support system suggests an incorrect diagnosis and the physician accepts it without scrutiny, automation bias has occurred. Usability testing can help assess this issue. Scenarios are designed, and real users (e.g., doctors) are observed while interacting with the system. When the system provides misleading suggestions, do users hesitate? Do they override the system’s recommendation with their own judgment? If users perceive the system as infallible, this poses a serious usability risk. In such cases, making decision rationales more visible in the interface or enhancing user training may be necessary. Test reports should address the question: “Do users critically evaluate system recommendations?” and share relevant findings with stakeholders if needed.
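One way to make the question "Do users critically evaluate system recommendations?" measurable in a usability study is to compare how often participants accept correct versus incorrect suggestions. The observations, the `acceptance_rates` helper and the 0.25 warning threshold below are hypothetical.

```python
# Hypothetical observations from a usability session with a clinical decision
# support prototype: (suggestion_was_correct, clinician_accepted_it)
OBSERVATIONS = [
    (True, True), (True, True), (True, False), (True, True),
    (False, True), (False, False), (False, True), (False, True),
]
INCORRECT_ACCEPTANCE_LIMIT = 0.25  # hypothetical threshold agreed with stakeholders

def acceptance_rates(observations):
    """Share of suggestions accepted, split by whether the suggestion was correct."""
    def rate(correct):
        decisions = [accepted for ok, accepted in observations if ok == correct]
        return sum(decisions) / len(decisions) if decisions else None
    return {"correct": rate(True), "incorrect": rate(False)}

rates = acceptance_rates(OBSERVATIONS)
automation_bias_suspected = rates["incorrect"] > INCORRECT_ACCEPTANCE_LIMIT
print(rates, "automation bias suspected:", automation_bias_suspected)
```

If wrong suggestions are accepted about as often as right ones, as in this made-up session, that is a strong signal for the test report that users are not scrutinizing the system's output.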

Resilience Against Adversarial Attacks and Data Poisoning

Malicious actors may attempt to deceive AI models by crafting adversarial examples or by corrupting training data. An adversarial example is an intentionally manipulated input designed to cause an incorrect prediction. For instance, subtly altering a few pixels in an image, in a way imperceptible to the human eye, can lead the model to misclassify it entirely. During testing, the model’s behavior against such adversarial inputs must be evaluated. As part of security testing, images with minor perturbations can be fed into the model and the classification outputs observed. If the model misidentifies a traffic sign because of a small perturbation (e.g., reading a “Stop” sign as “Speed Limit 90”), this indicates a serious vulnerability. Adversarial robustness testing can be conducted both in a white-box manner, using the model’s internals such as its gradients, and in a black-box manner, observing only its inputs and outputs. Data poisoning tests are equally critical: by injecting deliberately flawed samples into the training data, testers can measure how much the model’s performance degrades. These tests help uncover security weaknesses and inform preventive measures such as data sanitization algorithms or adversarial training techniques.
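A white-box adversarial test can be sketched with the Fast Gradient Sign Method (FGSM) applied to a hypothetical linear "stop sign" detector over a 4-pixel input. The weights, bias, input and `epsilon` are invented for illustration. For logistic regression, the gradient of the log loss with respect to the input is (p - y) * w, so FGSM moves each pixel by `epsilon` in the sign of that gradient.

```python
import math

# Hypothetical linear "stop sign" detector over a tiny 4-pixel input (values in [0, 1]).
WEIGHTS = [2.0, -1.5, 1.0, -0.5]
BIAS = -0.4

def p_stop(x):
    """Model's probability that the input is a stop sign."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1 / (1 + math.exp(-z))

def fgsm(x, true_label, epsilon):
    """Fast Gradient Sign Method for logistic regression.

    The log-loss gradient w.r.t. the input is (p - y) * w; each pixel is
    nudged by epsilon in the sign of that gradient (increasing the loss)
    and then clipped back into the valid [0, 1] range.
    """
    p = p_stop(x)
    adversarial = []
    for w, xi in zip(WEIGHTS, x):
        grad = (p - true_label) * w
        step = epsilon * (1 if grad > 0 else -1 if grad < 0 else 0)
        adversarial.append(min(1.0, max(0.0, xi + step)))
    return adversarial

clean = [0.6, 0.3, 0.5, 0.4]
attacked = fgsm(clean, true_label=1, epsilon=0.2)
print(f"clean: {p_stop(clean):.2f}, attacked: {p_stop(attacked):.2f}")
```

No pixel moves by more than 0.2, yet the prediction drops from about 0.66 to about 0.41 and crosses the 0.5 decision boundary. A robustness test would run this attack over a whole test set and report how many predictions flip for a given `epsilon`.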

Continuous Learning and Concept Drift

Some AI systems continuously learn and adapt to their environment. Over time, concept drift may occur: changes in environmental conditions or data distributions cause the system’s decision patterns to deviate from their intended purpose. For example, if user preferences evolve but a recommendation system continues to suggest outdated options, its effectiveness diminishes. Testers should periodically reassess model performance to detect concept drift, verifying whether the model is retrained at appropriate intervals and whether it still performs accurately on updated data. Statistical process control can be applied by comparing prediction accuracy in recent and older time periods to identify significant drops. If the system updates itself online, these updates must also be tested to ensure they do not introduce errors. Newly learned information should not corrupt previously acquired knowledge, much as regression testing verifies that a system remains stable after changes. In short, the long-term consistency of AI-enabled software must be monitored as it ages or operates in changing environments.
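The statistical process control idea can be sketched as a p-chart check. The baseline window gives the expected accuracy p0. For a recent window of n predictions, the lower control limit is p0 - 3 * sqrt(p0 * (1 - p0) / n), and accuracy below that limit raises a drift alarm. The monitoring data and the choice of 3 sigma are assumptions for illustration.

```python
import math

def accuracy(outcomes):
    """Fraction of correct predictions in a list of booleans."""
    return sum(outcomes) / len(outcomes)

def drift_alarm(baseline_outcomes, recent_outcomes, sigmas=3.0):
    """p-chart style check: is recent accuracy below the lower control limit?

    Returns (alarm, baseline accuracy, recent accuracy, lower control limit).
    """
    p0 = accuracy(baseline_outcomes)
    n = len(recent_outcomes)
    lcl = p0 - sigmas * math.sqrt(p0 * (1 - p0) / n)
    recent = accuracy(recent_outcomes)
    return recent < lcl, round(p0, 3), round(recent, 3), round(lcl, 3)

# Hypothetical monitoring data: True = prediction later confirmed correct.
older_window = [True] * 900 + [False] * 100    # 90% accurate
stable_week = [True] * 180 + [False] * 20      # 90% accurate
drifted_week = [True] * 150 + [False] * 50     # 75% accurate

print(drift_alarm(older_window, stable_week))   # (False, 0.9, 0.9, 0.836)
print(drift_alarm(older_window, drifted_week))  # (True, 0.9, 0.75, 0.836)
```

Using a control limit rather than a fixed accuracy target accounts for normal week-to-week noise. A small window gets a wider tolerance, while a sustained drop such as the 75% week is flagged for investigation or retraining.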

Ethical and Legal Compliance

AI systems can significantly affect, and even manipulate, individuals through their decisions. Therefore, adherence to ethical principles and fair behavior must be part of the testing process. In automated decision-making systems, such as credit approval, recruitment, or judicial support, compliance with non-discrimination and accountability principles must be verified. If a system consistently gives a negative outcome to one of two candidates who have identical qualifications and differ only in gender, this is ethically unacceptable. Such behavior can also conflict with regulations such as the EU General Data Protection Regulation (GDPR), whose Article 22 restricts decisions based solely on automated processing that significantly affect individuals. Testers should create profiles representing different demographic groups (e.g., race, gender, age) with otherwise equal attributes and verify whether the system produces consistent outputs. Trustworthy AI assessment checklists can be used to evaluate ethical compliance. For example, the European Union’s Ethics Guidelines for Trustworthy AI can serve as a reference, with items such as “Are decisions transparent and explainable?” or “Is human dignity respected?” If the system fails in these areas, the findings should be highlighted in the test report and corrective actions recommended. Ultimately, AI-powered software must be not only technically sound but also socially and ethically acceptable, and verifying this falls within the scope of testing responsibilities.
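The paired-profile approach can be sketched as follows. The recruitment `score_applicant` model is hypothetical and deliberately contains a gender-dependent rule so that the check has something to detect. The helper scores every combination of protected attribute values on an otherwise identical profile and flags any spread in the results.

```python
import itertools

# Hypothetical scoring model under test; a biased rule is injected on purpose.
def score_applicant(profile):
    score = 0.5 * profile["experience_years"] + 2.0 * profile["certifications"]
    if profile["gender"] == "female":
        score -= 1.0  # injected defect: the protected attribute affects the score
    return score

PROTECTED_VALUES = {
    "gender": ["female", "male"],
    "age_group": ["25-34", "45-54"],
}

def paired_profile_check(model, base_profile, protected_values, tolerance=1e-6):
    """Score every combination of protected attributes with otherwise equal data.

    Returns (flagged, spread, scores): flagged is True when the difference
    between the highest and lowest score exceeds the tolerance.
    """
    names = list(protected_values)
    scores = {}
    for combo in itertools.product(*(protected_values[n] for n in names)):
        profile = dict(base_profile, **dict(zip(names, combo)))
        scores[combo] = model(profile)
    spread = max(scores.values()) - min(scores.values())
    return spread > tolerance, spread, scores

base = {"experience_years": 6, "certifications": 2}
flagged, spread, scores = paired_profile_check(score_applicant, base, PROTECTED_VALUES)
print("inconsistent outputs:", flagged, "spread:", spread)  # inconsistent outputs: True spread: 1.0
```

The returned `scores` dictionary shows which combinations diverge, which is the evidence a tester would attach to the test report. A zero-tolerance check suits deterministic models, while a stochastic model would need repeated runs and a statistical threshold instead.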

Conclusion

The points above distinguish the testing of AI-enabled software from traditional approaches. Test teams must develop specialized test scenarios that address data quality, model behavior, user interaction, and security. Where necessary, less conventional testing techniques such as input variation, bidirectional testing, and exploratory testing should be applied.

In summary, artificial intelligence accelerates and enriches software testing processes. However, AI-based systems must be tested rigorously and comprehensively. AI offers intelligent tools that reduce the burden on testers and help detect more defects earlier. On the other hand, it is essential to ensure that AI systems are unbiased, explainable, and secure. This requires additional safeguards and techniques during testing. As emphasized in the ISTQB syllabus, technical accuracy alone is not sufficient for the success of AI-powered software. The system’s outputs must also be fair, understandable, and acceptable to users. Therefore, testers in AI-driven projects must be equipped with both engineering and ethical perspectives to ensure that these systems are not only intelligent but also trustworthy.

References:

1. ISTQB® Certified Tester – AI Testing (CT-AI) Syllabus, v1.0, October 1, 2021

– Official ISTQB syllabus for testing AI-based systems.

2. ISTQB® Certified Tester – AI Testing Syllabus, Turkish Translation, April 25, 2025

– Official Turkish translation of the CT-AI syllabus.

3. ISTQB® CT-AI Sample Exam – Questions/Answers, v1.2, December 8, 2024

– Sample exam questions and answers for the AI Testing certification.

4. ISTQB® Certified Tester – Foundation Level (CTFL) Syllabus, v4.0, April 21, 2023

– The latest ISTQB syllabus for foundational-level software testing.

5. ISTQB Software Testing Glossary, v1.0, 2014

– Standard glossary of terms used in software testing by ISTQB.

6. Garousi, V. et al. (2024). AI-powered Software Testing Tools: A Systematic Review and Empirical Assessment

– A systematic review and empirical evaluation of 55 AI-based testing tools.

– Access: arXiv:2409.00411
