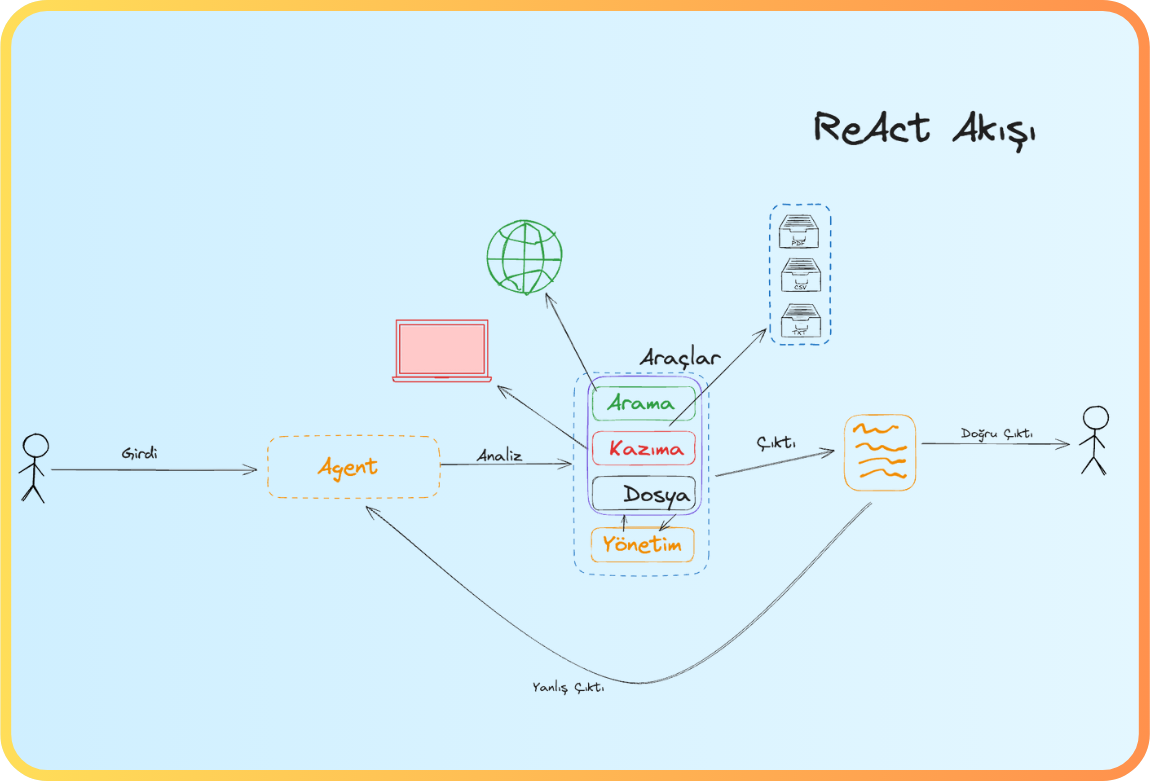

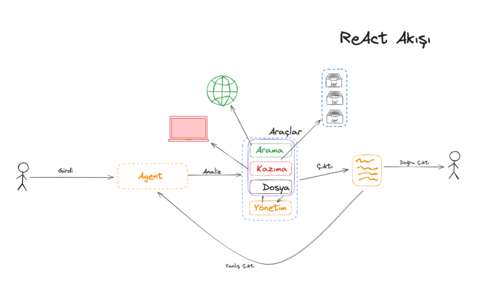

Artificial intelligence has evolved from being merely a talking system to a thinking system. Yet in recent years, one reality has become clear: thinking does not always lead to correct answers. Even the most advanced models today can confidently provide inaccurate or fabricated information. It doesn’t matter whether the domain is finance, healthcare, law, or education — incorrect information carries risk in every field. This raises the question: “Can AI be trusted?” One of the strongest answers to this question is ReAct.

Developed in 2022 by Yao, Zhao, Yu, and their team, ReAct (Reasoning + Acting) is an approach that encourages models not only to think, but also to question, verify, and update their plans when necessary. Released shortly before the debut of ChatGPT, this work helped lay the groundwork for autonomous systems such as AgentGPT and AutoGPT. In essence, ReAct tells AI: “Check before you think.”

The Problem: Thinking Models That Don’t Investigate

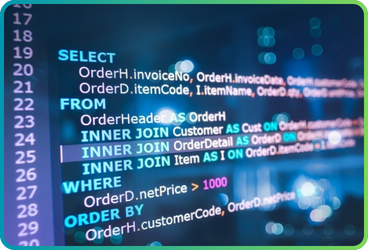

Language models have made significant leaps in recent years and can now reason step by step. However, they still have a major limitation: they cannot observe the outside world. On their own, models rely solely on their training data and cannot access new information. For example, if you ask, “Who won the 2025 World Cup?” and the model doesn’t have that data, it will guess, often incorrectly. When a model presents such a guess as fact, the result is called a hallucination.

Hallucinations are not just technical glitches; they can have real-world consequences. A legal system could base a ruling on incorrect information, a healthcare system could recommend the wrong treatment, or a financial tool could produce faulty analyses. In short, if the information cannot be trusted, much of AI’s potential is lost. It therefore became necessary to teach AI not only to think but also to question, and this is where ReAct comes in.

The Solution: Think, Act, Observe, and Reconsider

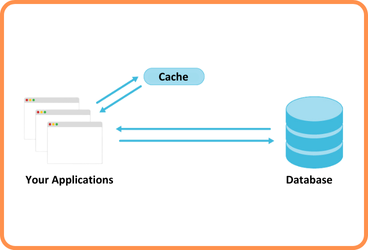

The philosophy of ReAct is simple: thinking alone is not enough; action is required. The model can access external sources, perform actions, observe outcomes, and update its decisions accordingly.

User Question: “In which field did Aziz Sancar win a Nobel Prize?”

Traditional System: "I know the answer. Answer: X."

ReAct System: "I don’t know, let me check."

Action: Search Wikipedia for Aziz Sancar.

Observation: “Aziz Sancar (born 1946) is a Turkish molecular biologist…”

Result: “Now I have verified information.”

This process enables the model to both reason and act. The model is no longer a closed box; it can interact with the outside world, verify information, and revise its decisions. This resembles how a person looks something up before answering.
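The think, act, observe cycle can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: `scripted_model` is a hard-coded stand-in for a language model call, `TOY_WIKI` and `search` stand in for a real search API, and the `Thought:` / `Action: tool[argument]` text format is an assumption modeled loosely on the traces shown in the ReAct paper.

```python
import re
from typing import Callable, Dict, Optional, Tuple

# Toy knowledge source standing in for a real search API (hypothetical data).
TOY_WIKI = {
    "Aziz Sancar": "Aziz Sancar is a Turkish scientist who shared the "
                   "2015 Nobel Prize in Chemistry.",
}

def search(query: str) -> str:
    """Look up a title in the toy source."""
    return TOY_WIKI.get(query, "No results found.")

def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM call: picks the next step from the transcript."""
    if "Observation:" not in transcript:
        return "Thought: I am not sure, let me check.\nAction: search[Aziz Sancar]"
    return "Thought: The observation answers the question.\nAction: finish[Chemistry]"

ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")

def react_loop(
    question: str,
    model: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> Tuple[Optional[str], str]:
    """Alternate model steps and tool calls until the model finishes."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)            # think and choose an action
        transcript += "\n" + step
        match = ACTION_RE.search(step)
        if match is None:                   # no parsable action: stop
            break
        name, arg = match.groups()
        if name == "finish":                # the model commits to an answer
            return arg, transcript
        tool = tools.get(name)
        observation = tool(arg) if tool else f"Unknown tool: {name}"
        transcript += f"\nObservation: {observation}"   # feed the result back
    return None, transcript

answer, trace = react_loop(
    "In which field did Aziz Sancar win the Nobel Prize?",
    scripted_model,
    {"search": search},
)
print(trace)
print("Answer:", answer)
```

The `max_steps` limit is a design choice in this sketch: it keeps a confused model from looping over tool calls indefinitely.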

For example, if a user asks, “What are the latest AI-related legal regulations in Turkey?” a traditional system would guess. A ReAct model, on the other hand, searches, observes, compares multiple sources if necessary, and only then generates an answer, which can significantly improve reliability.
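Comparing sources can be as simple as asking several of them and accepting an answer only when enough of them agree. The following is a rough sketch under that assumption; the source functions, `cross_check`, and the `min_agreement` threshold are all hypothetical and are not part of ReAct itself.

```python
from collections import Counter
from typing import Callable, List, Optional

Source = Callable[[str], Optional[str]]

def cross_check(query: str, sources: List[Source], min_agreement: int = 2) -> Optional[str]:
    """Return the most common answer if enough sources agree, else None."""
    answers = []
    for source in sources:
        answer = source(query)
        if answer:                                  # skip empty results
            answers.append(answer.strip().lower())  # normalize before comparing
    if not answers:
        return None
    best, count = Counter(answers).most_common(1)[0]
    return best if count >= min_agreement else None

# Hypothetical sources with canned answers, for illustration only.
def source_a(query: str) -> str:
    return "Chemistry"

def source_b(query: str) -> str:
    return "chemistry "

def source_c(query: str) -> str:
    return "Physics"

print(cross_check("Aziz Sancar Nobel field", [source_a, source_b, source_c]))
```

Returning `None` when sources disagree lets the calling system say "I could not verify this" instead of guessing.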

Does It Really Work?

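Research by Yao and colleagues shows that ReAct is not just theoretical. On question answering and fact verification benchmarks (HotpotQA and FEVER), the paper reports that letting the model consult a simple Wikipedia API reduces the hallucination and error propagation seen with reasoning alone. On interactive decision-making benchmarks, ReAct outperformed imitation and reinforcement learning baselines by an absolute success rate of 34% on ALFWorld and 10% on WebShop, an e-commerce simulation with tasks such as “Find a black leather laptop bag between 100–150 TL and compare prices.”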

The reason for this improvement is simple: ReAct does not guess; it investigates. Hallucination rates decrease, trust increases. ReAct-based systems not only provide answers but also explain why the answer is correct.

In other words, ReAct enhances both accuracy and trust, which is critical in modern AI systems where reliability is the weakest yet most crucial link.

Every Power Comes at a Cost

ReAct is powerful but not flawless. Each verification step comes with a cost. Queries to external sources can slow the system down, and using external APIs may become expensive under high traffic. The system’s success depends on the quality of the sources — poor data can undermine ReAct’s effectiveness.

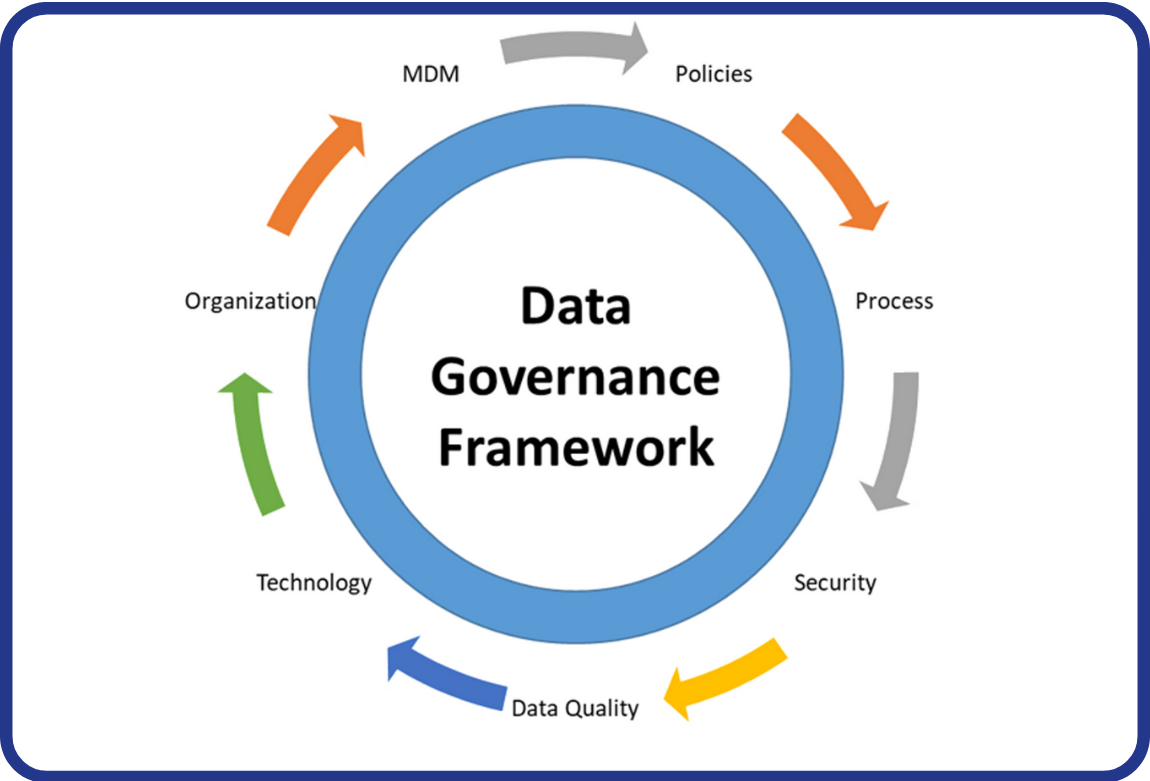

Another challenge is privacy. Because ReAct interacts with external systems, protecting personal or corporate data is essential. Therefore, the system must operate only with reliable, authorized sources. Proper integration also requires technical expertise, including API management, data access, and security policies.
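Restricting the system to authorized sources can start with a simple allowlist check before any request is made. This is a minimal sketch, assuming sources are identified by URL; the host names in `ALLOWED_HOSTS` and the `fetch` callback are hypothetical placeholders.

```python
from typing import Callable
from urllib.parse import urlparse

# Hypothetical allowlist of trusted hosts; a real deployment would load this
# from configuration governed by its security policy.
ALLOWED_HOSTS = {"en.wikipedia.org", "data.example.org"}

def is_authorized(url: str) -> bool:
    """Accept only HTTPS URLs whose host is on the allowlist."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in ALLOWED_HOSTS

def fetch_if_authorized(url: str, fetch: Callable[[str], str]) -> str:
    """Call fetch(url) only for authorized sources; refuse everything else."""
    if not is_authorized(url):
        raise PermissionError(f"Source not on the allowlist: {url}")
    return fetch(url)

print(is_authorized("https://en.wikipedia.org/wiki/Aziz_Sancar"))
print(is_authorized("https://unknown.example.com/page"))
```

Checking the parsed host rather than searching the URL string avoids being fooled by addresses that merely contain a trusted name somewhere in the path.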

Despite these costs, ReAct offers a significant advantage in areas where reliability is critical. Sacrificing speed for accuracy is a more sustainable strategy for many organizations, as systems that earn user trust gain a long-term competitive edge.

AI Is No Longer Just Thinking — It Is Investigating

ReAct represents not just a methodology but a revolution in approach. The model no longer only thinks — it observes, questions, plans, and revises its decisions when necessary. Like a human, it can reflect: “Where did I go wrong?”

This points toward a future in which AI will not only produce information but also verify it, explain it, and take responsibility for it. ReAct lays the foundation for this new era.

In summary, ReAct makes intelligent systems not just smarter, but more honest, transparent, and reliable. Sometimes, the best answer is not the one given immediately, but the one that has been verified.

Going forward, we will explore the power of the ReAct approach further with the concept of DeepAgent.

References

Primary Article:

- Yao, S., Zhao, J., Yu, D., Du, N., Shafran, I., Narasimhan, K. R., & Cao, Y. (2022). ReAct: Synergizing Reasoning and Acting in Language Models. arXiv:2210.03629

Related Articles:

- Wei, J., et al. (2022). Emergent Abilities of Large Language Models. arXiv:2206.07682

- Brown, T. B., et al. (2020). Language Models are Few-Shot Learners. arXiv:2005.14165

- Ouyang, L., et al. (2022). Training Language Models to Follow Instructions with Human Feedback. arXiv:2203.02155
