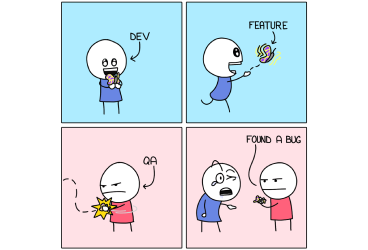

Using artificial intelligence not only to generate test scenarios or automation code, but also to maintain automation itself, represents one of the most effective and valuable applications of AI in testing.

Software test automation significantly reduces workload and provides confidence for regression testing. However, in fast-moving production environments, continuous product changes, environment shifts, or UI updates cause automation to require frequent maintenance. Teams that automate this maintenance effectively gain the maximum benefit from automation.

One of the most frustrating issues during automation execution is a test that fails on the first run but passes on the second or third attempt, even though neither the code nor the environment has changed. Tests that do not yield deterministic results like this are called flaky tests. Rather than simply asking whether to remove flaky tests from the suite, teams can use machine learning to identify the cause of each failure and so preserve test coverage. Using ML to measure how persistent this behavior is and how much it costs in execution time helps teams make better decisions.
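To make "persistence" and "execution cost" concrete, the sketch below assumes a hypothetical history of test attempts, each tagged with the commit it ran against. It treats a test as flaky on a commit when that commit saw both a pass and a fail, reports the fraction of commits on which the test was flaky, and sums the time spent on reruns there. The `RunRecord` format and both metrics are illustrative assumptions, not a standard.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RunRecord:
    """One execution attempt of a test (hypothetical record format)."""
    test: str
    commit: str      # code version the attempt ran against
    passed: bool
    seconds: float   # wall-clock duration of the attempt


def flakiness_report(records):
    """Per test: how persistently it is flaky and how much its reruns cost.

    A test counts as flaky on a commit if it both passed and failed there,
    i.e. the outcome changed with no code change. Records are assumed to be
    in execution order, so the first attempt per commit comes first.
    """
    outcomes = defaultdict(lambda: defaultdict(set))   # test -> commit -> {True, False}
    attempts = defaultdict(lambda: defaultdict(list))  # test -> commit -> [seconds]
    for r in records:
        outcomes[r.test][r.commit].add(r.passed)
        attempts[r.test][r.commit].append(r.seconds)

    report = {}
    for test, by_commit in outcomes.items():
        flaky_commits = [c for c, seen in by_commit.items() if len(seen) == 2]
        # Rerun cost: time spent on every attempt after the first, on flaky commits.
        rerun_cost = sum(sum(attempts[test][c][1:]) for c in flaky_commits)
        report[test] = {
            "persistence": len(flaky_commits) / len(by_commit),
            "rerun_seconds": rerun_cost,
        }
    return report
```

A test with high persistence and high rerun cost is a strong candidate for repair; one that was flaky once and is cheap may simply be watched.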

Another situation arises when the UI of the product under test changes. Even if locators are defined with stable IDs, a promotional popup that appears after login can cause every test to fail. Should we write an if-else block for this, or can ML handle it? Can an automation tool heal itself at runtime, for example by finding the object with Java and XPath in Testinium?

AI Techniques in Software Test Automation Maintenance

1. Self-Healing, Flaky Tests, and Intelligent Test Management

Software test automation has become an essential component of modern software development. However, academic and industry studies agree that maintaining automation is just as challenging as developing it. Frequent UI changes, asynchronous behavior, and continuous integration (CI) complexity rapidly increase maintenance costs. AI-supported approaches have emerged as one of the most effective ways to automate maintenance.

Why Does Test Automation Maintenance Require AI?

Research indicates that a significant portion of failing automation tests stems not from real product defects but from issues within the tests themselves [1], such as:

- Fragile locators
- Timing problems
- Flaky behavior

These issues cause misleading failures and cost developers substantial time in CI/CD pipelines. Parry et al. experimentally demonstrated that flaky tests significantly decrease developer productivity.

2. AI-Based Maintenance for Flaky Tests

Techniques Used

- Supervised machine learning (Random Forest)
- Hybrid models (rerun + machine learning)

Rather than relying on a single technique, the CANNIER approach detects flaky tests by combining ML with rerun strategies, achieving detection up to 10 times faster than classical methods [1].
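A minimal sketch of the hybrid idea, loosely modeled on the description above rather than on CANNIER's actual implementation: a classifier (for example a Random Forest trained on test features) supplies a flakiness probability, confident predictions are accepted directly, and only tests in an uncertain band are rerun. The callables and the `low`/`high` band limits are hypothetical placeholders.

```python
from typing import Callable, Dict, Iterable


def hybrid_flaky_triage(
    tests: Iterable[str],
    predict_flaky_proba: Callable[[str], float],  # hypothetical: trained model's P(flaky)
    rerun_is_flaky: Callable[[str], bool],        # hypothetical: expensive rerun-based check
    low: float = 0.2,
    high: float = 0.8,
) -> Dict[str, bool]:
    """Label each test as flaky (True) or not (False).

    Confident model predictions are accepted as-is; only tests whose predicted
    probability falls in the uncertain band [low, high] pay for reruns.
    """
    labels = {}
    for test in tests:
        p = predict_flaky_proba(test)
        if p < low:
            labels[test] = False                 # confidently stable: no rerun
        elif p > high:
            labels[test] = True                  # confidently flaky: no rerun
        else:
            labels[test] = rerun_is_flaky(test)  # uncertain: fall back to reruns
    return labels
```

Widening the band trades speed for accuracy: more tests are rerun, so fewer labels depend on the model alone.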

| Method | Issue |
|---|---|
| Continuous re-run | Accurate but very slow |
| Pure ML | Fast but has a risk of misclassification |

Table 1. Reasons for the CANNIER Approach

Maintenance Contribution:

The ML model estimates the probability that a test is flaky, so reruns can be applied only to candidate tests. Flaky tests can then be labeled, quarantined, or prioritized for maintenance automatically, significantly reducing manual analysis effort.
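The labeling, quarantining, and prioritizing step could look like the following sketch. It assumes flakiness probabilities from a model and per-test rerun costs (both hypothetical inputs), maps each probability to an action, and orders the maintenance queue by a simple expected-waste score: probability times rerun cost. The thresholds and the scoring rule are illustrative choices.

```python
from typing import Dict, List, Tuple


def plan_maintenance(
    flaky_proba: Dict[str, float],
    rerun_cost_seconds: Dict[str, float],
    quarantine_at: float = 0.9,   # hypothetical threshold
    label_at: float = 0.5,        # hypothetical threshold
) -> Tuple[Dict[str, str], List[str]]:
    """Assign an action per test and a maintenance queue ordered by expected waste."""
    actions = {}
    for test, p in flaky_proba.items():
        if p >= quarantine_at:
            actions[test] = "quarantine"  # e.g. still run it, but don't let it fail the build
        elif p >= label_at:
            actions[test] = "label"       # mark as suspected flaky
        else:
            actions[test] = "keep"
    # Expected waste = probability of being flaky x time lost to its reruns.
    candidates = [t for t, a in actions.items() if a != "keep"]
    queue = sorted(
        candidates,
        key=lambda t: flaky_proba[t] * rerun_cost_seconds.get(t, 0.0),
        reverse=True,
    )
    return actions, queue
```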

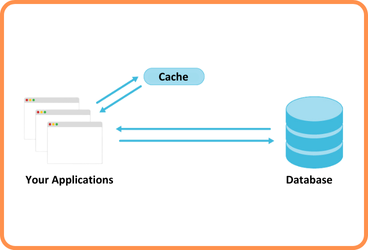

Figure 1. Flaky Test Cycle with Artificial Intelligence

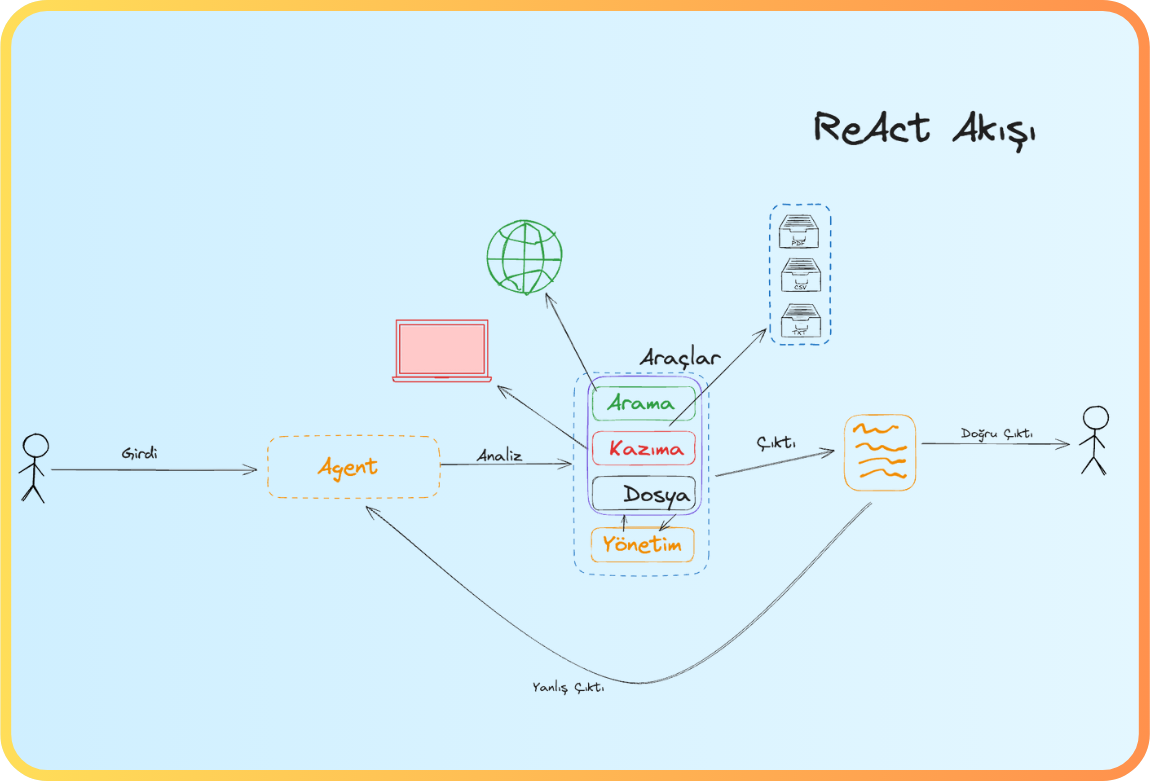

3. Self-Healing Tests

When an element cannot be found, self-healing test automation automatically searches for alternative locators and uses the best candidate at runtime.

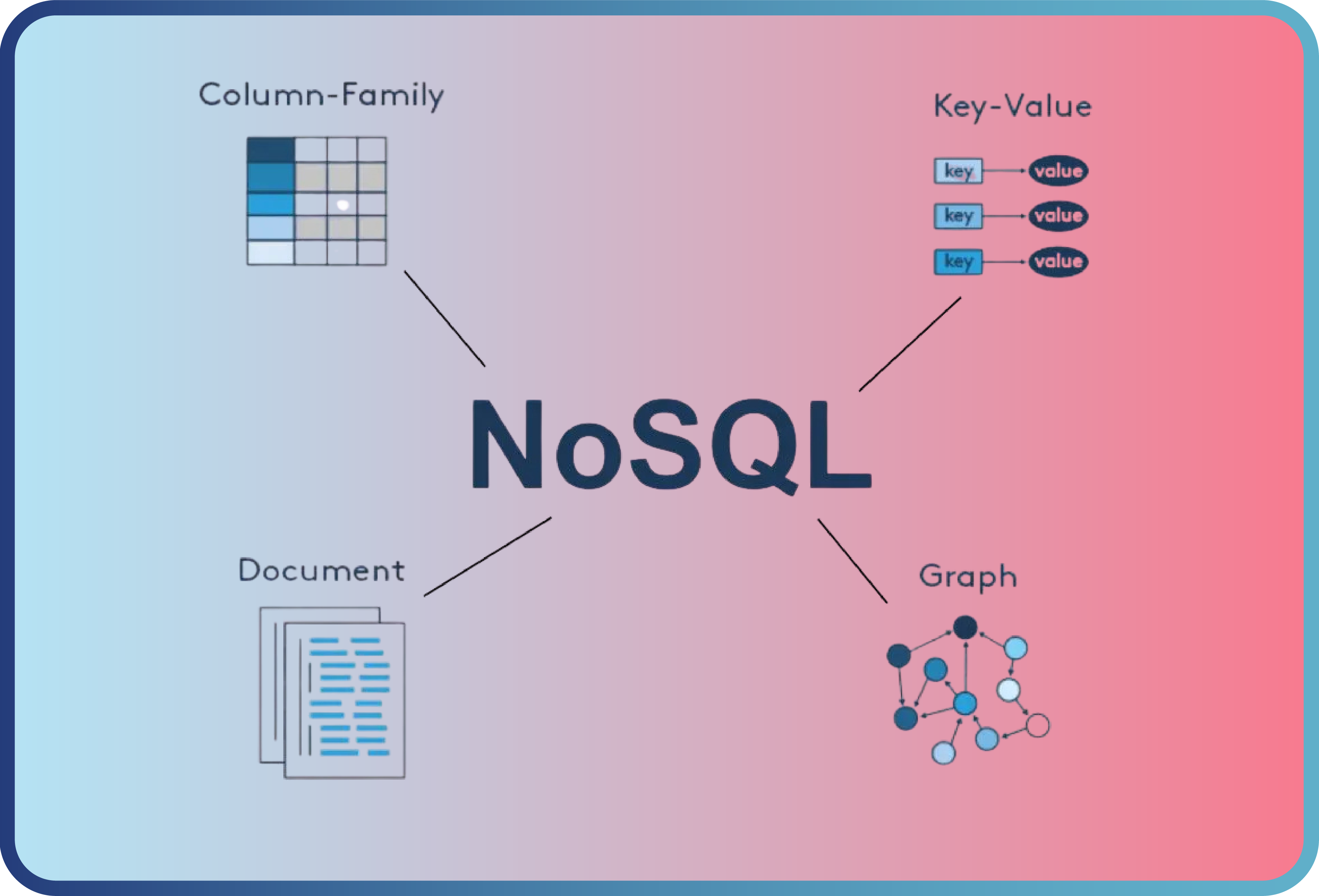

Figure 2. AI Workflow for Finding Alternative Locators
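The first step of that workflow, falling back to alternative locators when the primary one fails, can be sketched without a browser by modeling the page as a list of attribute dictionaries. Everything here (`find`, `find_with_healing`, the `(attribute, value)` locator shape) is a hypothetical stand-in; in Selenium the same chain would wrap `find_element` calls and catch `NoSuchElementException`. Each heal is logged so a person can review it later.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical page model: each element is a dict of its attributes.
Element = Dict[str, str]
# Hypothetical locator: (attribute name, expected value), e.g. ("id", "login").
Locator = Tuple[str, str]


def find(dom: List[Element], locator: Locator) -> Optional[Element]:
    """Return the first element whose attribute equals the expected value."""
    attr, value = locator
    return next((el for el in dom if el.get(attr) == value), None)


def find_with_healing(
    dom: List[Element],
    primary: Locator,
    alternatives: List[Locator],
    heal_log: List[Tuple[Locator, Locator]],
) -> Element:
    """Try the primary locator, then each stored alternative in order."""
    element = find(dom, primary)
    if element is not None:
        return element
    for alt in alternatives:
        element = find(dom, alt)
        if element is not None:
            heal_log.append((primary, alt))  # record which locator healed the step
            return element
    raise LookupError(f"no locator matched: {primary} or {alternatives}")
```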

Techniques Used:

- Document Object Model (DOM) tree similarity
- Attribute-based similarity (attribute matching)
- Deep learning: convolutional neural networks (CNNs) for visual UI recognition
- Reinforcement learning for adaptive healing

Figure 3. Techniques Used in Self-Healing
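Attribute-based similarity, the second technique in the list, can be approximated by comparing the attributes recorded when a test last passed with those of each candidate element. This sketch uses Python's `difflib.SequenceMatcher` for string similarity and a hypothetical weight per attribute, normalizing by the weights of attributes that either element actually has; the weights and the 0.6 minimum score are assumptions to tune per product.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Optional, Tuple

# Hypothetical weights: how much each attribute contributes to a match.
WEIGHTS = {"id": 0.3, "name": 0.2, "text": 0.3, "tag": 0.1, "class": 0.1}


def similarity(recorded: Dict[str, str], candidate: Dict[str, str]) -> float:
    """Weighted attribute similarity in [0, 1].

    Attributes missing from both elements are ignored, so the score is
    normalized by the weights of attributes that at least one element has.
    """
    total_weight = 0.0
    score = 0.0
    for attr, weight in WEIGHTS.items():
        a, b = recorded.get(attr, ""), candidate.get(attr, "")
        if not a and not b:
            continue
        total_weight += weight
        score += weight * SequenceMatcher(None, a, b).ratio()
    return score / total_weight if total_weight else 0.0


def best_match(
    recorded: Dict[str, str],
    candidates: List[Dict[str, str]],
    min_score: float = 0.6,
) -> Optional[Tuple[Dict[str, str], float]]:
    """Return (element, score) for the most similar candidate, or None if too weak."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: similarity(recorded, c))
    score = similarity(recorded, best)
    return (best, score) if score >= min_score else None
```

Returning the score alongside the element lets the caller apply the confidence threshold discussed in the risks section.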

Additionally, Kavuri (2023) demonstrated that autonomous ML agents significantly reduce flaky tests through real-time test repair.

Maintenance Contribution:

Simple UI changes no longer require rewriting test scripts; the system adapts automatically.

4. AI for Test Selection and Prioritization

Running the entire test suite on each execution is expensive and slow.

Techniques Used

- Change-based ML models
- Ranking algorithms
- Reinforcement Learning (RL)

At Facebook, Predictive Test Selection reduced test infrastructure costs by 50% while maintaining more than 95% fault detection (Machalica et al.).
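A very small stand-in for change-based selection: count how often each test failed in past changes that touched each file, then rank tests for a new change by those counts and keep only a fixed budget. Predictive Test Selection itself relies on a trained model over richer change and test features; this frequency count, the `History` shape, and the `budget` parameter are simplifying assumptions meant only to show the select-by-predicted-risk idea.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical history: (files changed, tests that failed) for each past change.
History = Iterable[Tuple[Set[str], Set[str]]]


def train(history: History) -> Dict[str, Counter]:
    """Count how often each test failed when each file was changed."""
    co_fail: Dict[str, Counter] = defaultdict(Counter)
    for changed_files, failed_tests in history:
        for f in changed_files:
            co_fail[f].update(failed_tests)
    return co_fail


def select_tests(co_fail: Dict[str, Counter], changed_files: Set[str], budget: int) -> List[str]:
    """Rank tests by co-failure count with the current change; keep the top `budget`."""
    scores: Counter = Counter()
    for f in changed_files:
        scores.update(co_fail.get(f, Counter()))
    return [test for test, _ in scores.most_common(budget)]
```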

Pan et al. showed that RL-based prioritization performs more stably than classical ML techniques.
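To illustrate the reward-driven flavor of RL-based prioritization, here is a bandit-style sketch: each test keeps a value estimate that moves toward a reward of 1.0 when the test catches a failure and 0.0 when it passes, and tests run in descending order of value. This is a deliberate simplification, not the method of Pan et al.; the learning rate and initial value are hypothetical.

```python
from typing import Dict, List


class RewardPrioritizer:
    """Bandit-style test prioritization: a simplified stand-in for RL approaches."""

    def __init__(self, learning_rate: float = 0.3, initial_value: float = 0.5):
        self.alpha = learning_rate
        # A new test starts mid-range, so it ranks above tests that keep passing.
        self.initial = initial_value
        self.values: Dict[str, float] = {}

    def order(self, tests: List[str]) -> List[str]:
        """Return tests sorted by current value estimate, highest first."""
        return sorted(tests, key=lambda t: self.values.get(t, self.initial), reverse=True)

    def update(self, test: str, failed: bool) -> None:
        """Move the estimate a fraction alpha toward this cycle's reward."""
        old = self.values.get(test, self.initial)
        reward = 1.0 if failed else 0.0
        self.values[test] = old + self.alpha * (reward - old)
```

Calling `update` after every CI cycle lets the ordering track which tests have recently been catching failures.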

Maintenance Contribution:

Fewer tests, faster feedback, and more manageable maintenance.

5. Risks and Mitigation Strategies

| Risk | Strategy |
|---|---|
| Erroneous self-healing | Confidence threshold + human approval |
| Over-automation | Hybrid (AI + rule-based) approach |
| Data dependency | Continuous model retraining |

Table 2. Risks and Strategies in AI-Based Test Automation Maintenance
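The first mitigation in Table 2, a confidence threshold with human approval, might be wired in as a three-way gate on the healing score: heal silently when very confident, heal but queue the change for review in a middle band, and fail the test otherwise so a possible real defect is not masked. The class name and both thresholds are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class HealingGate:
    auto_accept: float = 0.9   # hypothetical: heal silently at or above this score
    review_min: float = 0.6    # hypothetical: below this, fail the test
    review_queue: List[Tuple[str, str, float]] = field(default_factory=list)

    def decide(self, test: str, new_locator: str, score: float) -> str:
        """Return 'heal', 'heal_pending_review', or 'fail' for a healing candidate."""
        if score >= self.auto_accept:
            return "heal"
        if score >= self.review_min:
            # Use the candidate for this run, but queue it so a human can
            # approve the locator change before it is persisted.
            self.review_queue.append((test, new_locator, score))
            return "heal_pending_review"
        return "fail"  # too uncertain: surface a possible real defect
```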

6. Conclusion: Artificial Intelligence–Enabled Maintenance Is a Necessity

Academic and industrial results are clear: using AI for test automation maintenance is no longer experimental; it is a requirement for scalable software development.

Maximum benefit is achieved by combining:

- Self-healing
- Flaky test detection
- Intelligent test selection

Other findings show that test automation frameworks, especially those in which objects are identified and coded visually, benefit significantly from these methods. However, self-healing can occasionally mask real defects, which reduces confidence in the application and introduces risk. If your objects are identified by IDs, only experimentation will show how much self-healing can help you; the answer depends on both your product and the AI solution you use.

As we mentioned at the beginning, achieving the maximum benefit from automation depends on using artificial intelligence as effectively as possible in maintenance activities. Current AI solutions, tools, automation frameworks, and our capacity to use AI effectively are not far from this goal. However, in terms of consistency, reliability, and functionality, we still have some progress to make.

References:

[1] Parry et al. (2023). Empirically Evaluating Flaky Test Detection Techniques.

[2] Saarathy et al. (2024). Self-Healing Test Automation Framework using AI and ML.

[3] Kavuri (2023). Autonomous ML Agents for Real-Time Test Maintenance.

[4] Machalica et al. (2018). Predictive Test Selection.

[5] Pan et al. (2025). Reinforcement Learning for Test Prioritization.
